End-to-End Speech Synthesis: Revolutionizing AI Voiceovers for Video Producers

Understanding End-to-End Speech Synthesis

Imagine transforming written text into lifelike speech with unprecedented ease. End-to-end (E2E) speech synthesis is making this a reality, revolutionizing AI voiceovers in video production.

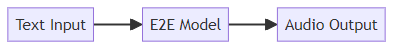

E2E speech synthesis is a method that converts text directly to audio, streamlining the traditional process. Instead of chaining separately engineered modules for text analysis, acoustic modeling, and vocoding, E2E models learn the mapping from text to speech with neural networks trained on paired text and audio. This simplified pipeline can lead to higher quality and more natural-sounding speech. (NaturalVoices Dataset with an Automatic Processing Pipeline)

- Definition: E2E speech synthesis directly converts text to audio, reducing intermediate steps.

- Contrast with Traditional Methods: It eliminates separate modules for text analysis, acoustic modeling, and vocoding.

- Benefits: This approach simplifies the pipeline and can improve the quality and naturalness of speech.

Early speech synthesis systems relied on rule-based approaches, which often sounded robotic and lacked flexibility. (Revolutionizing Text-to-Speech with AI: Human-Like TTS Solutions) Statistical parametric speech synthesis improved naturalness, but it still required manual feature engineering. The deep learning revolution brought E2E models, leveraging neural networks to achieve superior performance.

- Early Rule-Based Systems: These systems had limited naturalness and flexibility.

- Statistical Parametric Speech Synthesis: Naturalness improved, but feature engineering was necessary.

- Deep Learning Revolution: E2E models use neural networks for better performance.

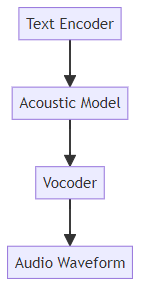

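Conceptually, an E2E text-to-speech (TTS) system still includes three key components, but each is learned from data rather than hand-engineered, and some models combine all three in a single network. First, the text encoder converts the input text (characters or phonemes) into a hidden representation. Next, the acoustic model predicts acoustic features, such as mel-spectrogram frames, from this representation. Finally, a vocoder generates the final audio waveform from the predicted acoustic features.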

- Text Encoder: Converts text into a meaningful representation.

- Acoustic Model: Predicts acoustic features from the text representation.

- Vocoder: Generates the final audio waveform from the acoustic features.
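To make the division of labor concrete, here is a deliberately tiny Python sketch of the three stages. Every function and constant in it (`text_encoder`, `acoustic_model`, `vocoder`, the 16 kHz sample rate, the 10 ms frame hop) is a hypothetical stand-in: real systems replace each stage with a trained neural network and predict full mel-spectrogram frames rather than a single pitch value per frame. What the sketch does show is the data flow, from symbols to frame-level features to waveform samples.

```python
import math
import string

SAMPLE_RATE = 16_000  # samples per second (assumed)
HOP = 160             # samples per acoustic frame, i.e. 10 ms at 16 kHz (assumed)

def text_encoder(text: str) -> list[int]:
    """Stage 1: map text to symbol IDs (0 = space, 1-26 = letters).
    A real encoder would turn these into learned hidden vectors."""
    ids = []
    for ch in text.lower():
        if ch == " ":
            ids.append(0)
        elif ch in string.ascii_lowercase:
            ids.append(ord(ch) - ord("a") + 1)
    return ids

def acoustic_model(ids: list[int], frames_per_symbol: int = 5) -> list[float]:
    """Stage 2: predict one acoustic feature per frame.
    Here the 'feature' is a single pitch value in Hz; real models predict
    a full mel-spectrogram frame instead. Spaces map to silence (0 Hz)."""
    frames = []
    for symbol in ids:
        pitch = 0.0 if symbol == 0 else 100.0 + 5.0 * symbol
        frames.extend([pitch] * frames_per_symbol)
    return frames

def vocoder(frames: list[float]) -> list[float]:
    """Stage 3: turn frame-level features into waveform samples.
    A sine oscillator with continuous phase stands in for a neural vocoder."""
    samples, phase = [], 0.0
    for pitch in frames:
        for _ in range(HOP):
            samples.append(0.5 * math.sin(phase) if pitch > 0 else 0.0)
            phase += 2 * math.pi * pitch / SAMPLE_RATE
    return samples

def synthesize(text: str) -> list[float]:
    """Chain the three stages: text -> symbol IDs -> frames -> samples."""
    return vocoder(acoustic_model(text_encoder(text)))

audio = synthesize("hi there")
print(len(audio) / SAMPLE_RATE, "seconds of audio")
```

Because each stage only consumes the previous stage's output, any one of them can be upgraded without touching the others; fully E2E models such as NaturalSpeech blur these boundaries by generating the waveform directly from text.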

The Advantages of E2E Speech Synthesis for Video Producers

Imagine cutting voiceover production costs while achieving a level of naturalness that rivals human recordings. End-to-end (E2E) speech synthesis offers video producers exactly that, unlocking a range of tangible advantages.

E2E models significantly enhance the naturalness and expressiveness of AI-generated voiceovers. This leads to more engaging and believable audio content.

- More Human-Like Voices: E2E models are designed to capture the subtle nuances of human speech, including intonation, rhythm, and emphasis.

- Emotional Control: Advanced models now offer controls for adjusting the emotion and speaking style, allowing video producers to tailor the voiceover to the specific tone of their content.

- Reduced Artifacts: E2E synthesis minimizes robotic or unnatural sounds, delivering a smoother and more polished final product.

E2E speech synthesis streamlines the video production workflow, saving time and resources. This allows video producers to focus on other critical aspects of their projects.

- Simplified Process: E2E models eliminate the need to tweak multiple parameters or modules, simplifying the overall process.

- Faster Turnaround Times: Video producers can quickly generate voiceovers for their projects, accelerating production timelines.

- Easy Integration: Because E2E tools typically export standard audio files or expose APIs, they fit into most video editing software and existing workflows.

The adoption of E2E speech synthesis can lead to significant cost savings and improved scalability for video production teams. This levels the playing field, making high-quality voiceovers accessible to a wider range of creators.

- Reduced Studio Costs: By minimizing the need for professional voice actors and recording studios, E2E synthesis cuts down on production expenses.

- Scalable Voiceover Creation: Easily generate voiceovers for multiple videos or languages, scaling content creation efforts without proportional cost increases.

- Accessibility: E2E technology makes professional-quality voiceovers accessible to smaller production teams with limited budgets.

Popular E2E Speech Synthesis Models and Techniques

E2E speech synthesis models are rapidly evolving, and several have risen to prominence. Understanding these models helps video producers choose the best tools for AI voiceovers.

Tacotron and Tacotron 2 are early, influential E2E models. They use a sequence-to-sequence architecture: an encoder reads the input text, and a decoder then generates the output spectrogram one step at a time, conditioning each step on the frames it has already produced.

- Overview: These models are built upon a sequence-to-sequence architecture, laying the groundwork for subsequent advancements.

- Key Features: They use an attention mechanism to align the text with the audio, and they predict mel-spectrograms, which are time-frequency representations of audio with frequencies mapped onto the perceptually motivated mel scale. According to Tacotron: Towards End-to-End Speech Synthesis, Tacotron achieved a mean opinion score (MOS) of 3.82 on a 5-point scale for US English, outperforming a production parametric system in naturalness. MOS is a subjective measure in which listeners rate samples (typically from 1 to 5) and the ratings are averaged, so higher scores indicate greater perceived naturalness. The production parametric system was a conventional statistical parametric synthesizer of the kind then deployed in production, built from separately trained components rather than as a single E2E model.

- Limitations: Tacotron models can be slow because of their autoregressive nature, and they often require large datasets to train effectively.
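The mel scale mentioned above spaces frequency bands the way human pitch perception does: finely at low frequencies and coarsely at high ones. The following Python sketch computes where the bands of a mel-spectrogram fall, assuming the HTK-style formula mel = 2595 · log10(1 + f/700) and 80 bands up to 8 kHz. Both are common choices but not universal; libraries differ in which mel-scale variant they use by default.

```python
import math

def hz_to_mel(f_hz: float) -> float:
    """HTK-style mel scale: close to linear below about 1 kHz, logarithmic above."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)

def mel_to_hz(mel: float) -> float:
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)

def mel_band_centers(n_mels: int, f_min: float, f_max: float) -> list[float]:
    """Center frequencies (Hz) of n_mels bands spaced evenly on the mel scale
    between f_min and f_max (the edges themselves are excluded)."""
    lo, hi = hz_to_mel(f_min), hz_to_mel(f_max)
    step = (hi - lo) / (n_mels + 1)
    return [mel_to_hz(lo + step * (i + 1)) for i in range(n_mels)]

centers = mel_band_centers(n_mels=80, f_min=0.0, f_max=8000.0)
low_gap = centers[1] - centers[0]
high_gap = centers[-1] - centers[-2]
print(f"lowest band gap: {low_gap:.1f} Hz, highest band gap: {high_gap:.1f} Hz")
```

The gap between neighboring bands grows with frequency, which is why a mel-spectrogram captures the detail listeners notice most while using far fewer values than a linear-frequency spectrogram.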

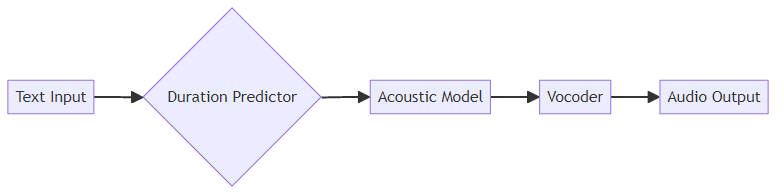

FastSpeech and FastSpeech 2 address the speed limitations of earlier models. These models generate speech in parallel, which makes them significantly faster than Tacotron.

- Non-Autoregressive Models: FastSpeech models generate speech in parallel, which drastically reduces synthesis time.

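- Duration Prediction: A key innovation is predicting how many spectrogram frames each phoneme (the smallest unit of sound that distinguishes one word from another) should last. A length regulator then repeats each phoneme's hidden representation for its predicted number of frames, so the output length is known before decoding begins. FastSpeech 2 additionally predicts pitch and energy.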

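- Improved Robustness: Because the text-to-audio alignment comes from explicit durations rather than learned attention, FastSpeech models largely avoid the word skipping and repetition that attention-based autoregressive models such as Tacotron can exhibit, leading to more consistent output.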
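The duration-based expansion that makes parallel generation possible fits in a few lines. This Python sketch assumes the hidden vectors and integer frame counts are already given (in FastSpeech they come from the encoder and the duration predictor); the `alpha` factor mirrors FastSpeech's option of scaling durations to change speaking rate, and the function name is illustrative rather than taken from any library.

```python
def length_regulator(phoneme_states: list[list[float]], durations: list[int],
                     alpha: float = 1.0) -> list[list[float]]:
    """Expand phoneme-level hidden vectors to frame level.

    Each phoneme's vector is repeated round(duration * alpha) times;
    alpha > 1 slows speech down and alpha < 1 speeds it up."""
    if len(phoneme_states) != len(durations):
        raise ValueError("expected exactly one duration per phoneme")
    frames = []
    for state, duration in zip(phoneme_states, durations):
        n_frames = max(0, round(duration * alpha))
        # Copy the vector for each frame so later per-frame edits stay independent.
        frames.extend(list(state) for _ in range(n_frames))
    return frames

# Three phonemes with toy 2-dimensional hidden states and predicted durations.
states = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6]]
durations = [2, 1, 3]
frames = length_regulator(states, durations)             # 6 frames
slower = length_regulator(states, durations, alpha=2.0)  # 12 frames
```

Once the frame-level sequence exists, the decoder can process every frame at the same time instead of waiting for the previous one, which is where the speedup over autoregressive decoding comes from.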

NaturalSpeech aims to achieve human-level quality in speech synthesis. It leverages variational autoencoders (VAEs) to generate speech waveforms.

- VAE-Based E2E TTS: NaturalSpeech uses VAEs for end-to-end text-to-waveform generation.

- Human-Level Quality: The goal is speech that listeners cannot distinguish from human recordings. NaturalSpeech: End-to-End Text to Speech Synthesis with Human-Level Quality reports no statistically significant difference from human recordings on the LJSpeech benchmark dataset.

- Key Modules: It incorporates phoneme pre-training, differentiable duration modeling, bidirectional prior/posterior modeling, and a memory mechanism in the VAE.
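At the heart of any VAE-based TTS model is a latent variable that is sampled in a differentiable way and kept close to a prior. The Python sketch below shows two generic building blocks under simple assumptions: the reparameterization trick for a diagonal Gaussian, and the KL divergence between a posterior (which in NaturalSpeech is computed from speech) and a prior (computed from text). NaturalSpeech's actual prior and posterior are considerably more elaborate, which is what its bidirectional prior/posterior modeling addresses, so treat this as an illustration of the VAE mechanics only.

```python
import math
import random

def reparameterize(mu: list[float], log_var: list[float], rng: random.Random) -> list[float]:
    """Sample z = mu + sigma * eps with eps ~ N(0, 1). In an autodiff framework,
    writing the sample this way lets gradients flow back to mu and sigma."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def kl_diag_gaussians(mu_q: list[float], log_var_q: list[float],
                      mu_p: list[float], log_var_p: list[float]) -> float:
    """KL(q || p) between diagonal Gaussians q (posterior) and p (prior),
    summed over latent dimensions. Variances are passed as log-variances."""
    total = 0.0
    for mq, lvq, mp, lvp in zip(mu_q, log_var_q, mu_p, log_var_p):
        total += 0.5 * (lvp - lvq + (math.exp(lvq) + (mq - mp) ** 2) / math.exp(lvp) - 1.0)
    return total

rng = random.Random(0)
posterior_mu, posterior_log_var = [0.5, -0.2], [-1.0, -0.5]  # e.g. from a speech encoder
prior_mu, prior_log_var = [0.4, 0.0], [-0.8, -0.5]           # e.g. from a text encoder
z = reparameterize(posterior_mu, posterior_log_var, rng)
kl = kl_diag_gaussians(posterior_mu, posterior_log_var, prior_mu, prior_log_var)
print("sample:", z, "KL:", kl)
```

Training pushes this KL term toward zero so that, at inference time, sampling from the text-derived prior yields latents the waveform decoder already knows how to render.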

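Two neural network architectures recur across these models: recurrent neural networks (RNNs), which Tacotron and Tacotron 2 use in their encoders and decoders, and Transformer networks, whose self-attention layers underpin FastSpeech. Both process sequential data; self-attention additionally lets every position attend directly to every other position, which helps capture long-range dependencies.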
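Self-attention, the core operation of Transformer layers, can be written out directly. This pure-Python sketch implements standard scaled dot-product attention, where each query takes a softmax-weighted average of all values; passing the same sequence as queries, keys, and values gives self-attention. Tacotron 2's text-to-audio alignment uses a related but different variant (location-sensitive attention), which this sketch does not cover.

```python
import math

def softmax(scores: list[float]) -> list[float]:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries: list[list[float]], keys: list[list[float]],
                                 values: list[list[float]]):
    """For each query q, weight every value by softmax(q . k / sqrt(d)) over all
    keys k, then return the weighted sums along with the weights."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out = [sum(w * v[c] for w, v in zip(weights, values)) for c in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Self-attention over a toy sequence of three 2-dimensional vectors:
# queries, keys, and values are all the same sequence.
seq = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = scaled_dot_product_attention(seq, seq, seq)
```

Every output mixes information from the entire sequence in a single step, no matter how far apart two positions are, whereas an RNN has to carry that information through every intermediate step.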

Implementing E2E Speech Synthesis in Your Video Production Workflow

Ready to bring E2E speech synthesis into your video production? Successfully implementing this technology requires a strategic approach to ensure optimal results.

Selecting the right platform is the first step. Consider factors such as voice quality, language support, customization options, and pricing.

- Voice Quality: Evaluate the naturalness and clarity of the voices offered. High-quality voices are essential for engaging your audience.

- Language Support: Ensure the platform supports the languages you need for your video projects. Multilingual capabilities can significantly expand your reach.

- Customization Options: Look for platforms that allow you to adjust parameters like pitch, speed, and emphasis. This level of control enables you to tailor the voiceover to fit your specific needs.

- Pricing: Compare the pricing models of different platforms to find one that aligns with your budget and usage patterns. Some platforms offer pay-as-you-go options, while others have subscription-based plans.
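Because many TTS services bill by the number of characters synthesized, a quick calculation shows which pricing model suits your volume. In the Python sketch below every price is a made-up placeholder, not any provider's actual rate; substitute current figures from the price lists you are comparing.

```python
def pay_as_you_go_cost(chars: int, price_per_million: float) -> float:
    """Cost when every character is billed at a flat rate."""
    return chars / 1_000_000 * price_per_million

def subscription_cost(chars: int, monthly_fee: float, included_chars: int,
                      overage_per_million: float) -> float:
    """Cost of a plan with a fixed fee, an included quota, and an overage rate."""
    extra = max(0, chars - included_chars)
    return monthly_fee + extra / 1_000_000 * overage_per_million

def cheaper_plan(payg: float, sub: float) -> str:
    if payg < sub:
        return "pay-as-you-go"
    if sub < payg:
        return "subscription"
    return "either"

# Hypothetical placeholder prices (currency units), not real provider rates.
PAYG_RATE = 20.0                                     # per million characters
SUB_FEE, SUB_INCLUDED, SUB_OVERAGE = 40.0, 3_000_000, 15.0

for monthly_chars in (500_000, 2_000_000, 5_000_000):
    payg = pay_as_you_go_cost(monthly_chars, PAYG_RATE)
    sub = subscription_cost(monthly_chars, SUB_FEE, SUB_INCLUDED, SUB_OVERAGE)
    print(f"{monthly_chars:>9,} chars: pay-as-you-go {payg:.2f}, "
          f"subscription {sub:.2f} -> {cheaper_plan(payg, sub)}")
```

With these placeholder numbers the two plans cost the same at 2 million characters a month; below that volume pay-as-you-go wins, and above it the subscription does.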

Popular platforms include Google Cloud Text-to-Speech, Amazon Polly, and Microsoft Azure AI Speech. Each offers a range of features and pricing options. Exploring open-source E2E TTS libraries and models can also be a cost-effective alternative for those with technical expertise.

Customization is key to creating unique and engaging voiceovers. You can use techniques like voice cloning, parameter adjustments, and SSML to fine-tune your audio.

- Voice Cloning: Create a digital replica of your own voice or hire a voice actor to develop a custom voice. This adds a personal touch to your videos.

- Parameter Adjustments: Fine-tune parameters such as pitch, speed, and emphasis to match the tone and style of your video content. Experiment with different settings to achieve the desired effect.

- SSML (Speech Synthesis Markup Language): Use SSML tags to control pronunciation, intonation, and other aspects of speech. SSML provides a powerful way to add nuance and expressiveness to your voiceovers. For example, this markup makes "very" stand out:

`<speak>This is a <emphasis level="strong">very</emphasis> important point.</speak>`
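When scripts are long, assembling SSML by hand invites mistakes such as an unescaped "&" that breaks the markup. The Python sketch below uses hypothetical helper names (`text`, `emphasis`, `pause`, `prosody`, `speak`) to build SSML from pieces, escape plain text, and check that the result is well-formed XML. The tags it emits (`<emphasis>`, `<break>`, `<prosody>`) are standard SSML elements, but providers differ in which tags and attribute values they honor, so check your platform's documentation.

```python
from typing import Optional
from xml.sax.saxutils import escape, quoteattr
import xml.etree.ElementTree as ET

def text(s: str) -> str:
    """Plain text, with &, <, > escaped so the SSML stays well-formed."""
    return escape(s)

def emphasis(s: str, level: str = "strong") -> str:
    return f"<emphasis level={quoteattr(level)}>{escape(s)}</emphasis>"

def pause(ms: int) -> str:
    return f'<break time="{ms}ms"/>'

def prosody(inner: str, rate: Optional[str] = None, pitch: Optional[str] = None) -> str:
    """Wrap already-built SSML in a <prosody> element with optional rate/pitch."""
    attrs = "".join(f" {name}={quoteattr(value)}"
                    for name, value in (("rate", rate), ("pitch", pitch)) if value)
    return f"<prosody{attrs}>{inner}</prosody>"

def speak(*parts: str) -> str:
    doc = "<speak>" + "".join(parts) + "</speak>"
    ET.fromstring(doc)  # raises ParseError if the markup is not well-formed
    return doc

ssml = speak(
    text("This is a "), emphasis("very"), text(" important point."),
    pause(500),
    prosody(text("Let's slow down here."), rate="slow"),
)
print(ssml)
```

The first three pieces reproduce the emphasis example above exactly; the pause and the slower closing sentence show how the same helpers cover the pitch and speed adjustments mentioned earlier.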

Seamless integration with your video editing software is crucial for a smooth workflow. Different integration methods offer varying levels of flexibility and control.

- Direct Integration: Some video editors offer built-in TTS capabilities, allowing you to generate voiceovers directly within the editing environment.

- API Integration: Use APIs to connect TTS platforms with your editing workflow. This approach provides greater flexibility and control over the synthesis process.

- File Import: Generate audio files using a TTS platform and import them into your video project. This straightforward method works with virtually any video editing software.
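For the file-import route, the synthesized audio just has to land in a format your editor reads. This Python sketch writes mono 16-bit PCM WAV using only the standard library's `wave` module; the 440 Hz tone is a placeholder for real TTS output, and the 24 kHz sample rate is an assumption, so match whatever rate your TTS platform returns.

```python
import math
import os
import struct
import tempfile
import wave

def write_wav(path: str, samples: list[float], sample_rate: int = 24_000) -> None:
    """Write mono float samples in [-1.0, 1.0] to a 16-bit PCM WAV file."""
    pcm = bytearray()
    for s in samples:
        clipped = max(-1.0, min(1.0, s))                  # avoid int16 overflow
        pcm += struct.pack("<h", round(clipped * 32767))  # little-endian int16
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)  # mono
        wav.setsampwidth(2)  # 2 bytes per sample = 16-bit
        wav.setframerate(sample_rate)
        wav.writeframes(bytes(pcm))

# Placeholder audio: one second of a quiet 440 Hz tone standing in for TTS output.
rate = 24_000
tone = [0.3 * math.sin(2 * math.pi * 440 * n / rate) for n in range(rate)]
out_path = os.path.join(tempfile.gettempdir(), "voiceover_demo.wav")
write_wav(out_path, tone, rate)
print("wrote", out_path)
```

Uncompressed WAV trades file size for compatibility; if your TTS platform returns MP3 or another format, you can usually import that directly instead.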

By carefully selecting the right platform, customizing voices, and integrating TTS into your workflow, you can create professional-quality voiceovers efficiently.

Kveeky: Your AI Voiceover Solution

Ready to transform your video content with AI voiceovers? Kveeky provides a streamlined solution for video producers seeking high-quality, lifelike voiceovers. It uses advanced E2E speech synthesis models, such as those based on architectures like FastSpeech or NaturalSpeech, to turn text into lifelike audio in a single streamlined process.

Kveeky offers a user-friendly platform for generating high-quality AI voiceovers. Turn written text into engaging audio effortlessly.

Customize voice options to match your project's needs. Achieve the perfect tone and style for your content.

Simplify your video production workflow and elevate your content with Kveeky. Create professional voiceovers in minutes, saving time and resources.

- AI Scriptwriting: AI scriptwriting services help you craft compelling narratives, overcome writer's block, and create engaging content.

- Multilingual Voiceovers: Voiceover services are available in multiple languages, so you can expand your audience and connect with viewers worldwide.

- Voice Customization: Match voice options to your brand and content style to create a unique audio identity that resonates with your target audience.

- Text-to-Speech Generation: Input your script and generate a high-quality voiceover in seconds.

- User-Friendly Interface: Select scripts and voices easily and create voiceovers with minimal effort.

Try Kveeky for free with no credit card required. Experience the power of AI voiceovers without any commitment.

Save time and resources with AI-powered voiceover generation. Reduce production costs and accelerate your workflow.

Achieve professional-quality results without expensive voice actors. Access high-quality voiceovers at a fraction of the traditional cost.

Easily create engaging and accessible content for your audience. Enhance the viewing experience with clear, natural-sounding voiceovers.

Discover Kveeky, the AI voiceover tool that turns scripts into lifelike voiceovers and helps you produce engaging videos that captivate your audience.

Challenges and Future Directions in E2E Speech Synthesis

Even with the impressive strides in E2E speech synthesis, challenges remain before it can truly mirror human speech in all its complexity. Addressing these challenges is key to unlocking the full potential of AI voiceovers.

E2E models thrive on data. They typically require vast amounts of training data to achieve high-quality speech synthesis. This can be a hurdle, especially when creating niche voices or for low-resource languages.

- Need for Large Datasets: E2E models' performance often correlates with the size and quality of the training data. Gathering and curating these datasets can be resource-intensive.

- Domain Adaptation: Performance can dip when models encounter new domains or speaking styles not seen during training. Imagine a model trained on news articles struggling with a children’s story.

- Low-Resource Languages: Developing E2E systems for languages with limited data is a significant challenge. Techniques such as transfer learning (adapting a model pretrained on data-rich languages) and data augmentation help make up for the shortage.

While E2E models excel at natural-sounding speech, controlling the nuances remains an area for improvement. This includes fine-tuning emotions and speaking styles.

- Fine-Grained Control: Video producers need the ability to adjust specific aspects of speech, like emotion, prosody, and emphasis. This level of control ensures the voiceover aligns perfectly with the video's tone.

- Disentangled Representations: Separating different factors of variation in speech (e.g., emotion, accent, speaking rate) allows for more targeted control. This can lead to more customizable and expressive voiceovers.

- Zero-Shot Voice Cloning: Cloning a voice from only a short reference recording, without retraining the model, opens exciting possibilities, such as letting video producers quickly create custom voices for their projects. Results still vary across this active research area, and the immediate focus is on making cloned voices more robust and natural so they are ready for production use.
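One common way to judge how closely a cloned voice matches its reference is to compare speaker embeddings: fixed-length vectors, produced by a speaker-verification model, that summarize what a voice sounds like. The Python sketch below computes their cosine similarity; the three-dimensional embeddings are hypothetical toy values (real embeddings typically have hundreds of dimensions and come from a trained model), and scores closer to 1 indicate more similar voices.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1 = same direction,
    0 = orthogonal, -1 = opposite."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0.0:
        raise ValueError("cannot compare a zero vector")
    return dot / norm

# Hypothetical toy embeddings; real ones come from a speaker-verification model.
reference = [0.9, 0.1, 0.3]    # the voice being cloned
cloned = [0.8, 0.2, 0.35]      # output of the cloning system
unrelated = [-0.2, 0.9, -0.1]  # a different speaker

print(f"cloned vs reference:    {cosine_similarity(cloned, reference):.3f}")
print(f"unrelated vs reference: {cosine_similarity(unrelated, reference):.3f}")
```

A score like this captures only speaker identity, not naturalness, so it complements listening tests such as MOS rather than replacing them.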

Real-world text is messy. E2E systems need to handle disfluencies, typos, and other imperfections gracefully.

- Handling Disfluencies: Spontaneous speech often includes hesitations ("um," "ah") and self-corrections. E2E models need to handle these disfluencies without sounding unnatural.

- Dealing with Noisy Text: Robustness to typos, abbreviations, and unusual characters is essential for real-world applications. A model should still produce reasonable speech even with imperfect input.

- Adversarial Attacks: As E2E systems become more sophisticated, protecting them from malicious inputs is crucial. Ensuring the model is resilient to adversarial attacks maintains its integrity.
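Handling noisy text usually starts with a text-normalization step that rewrites input into speakable words before synthesis. This Python sketch is a minimal rule-based normalizer under stated assumptions: its abbreviation table is a tiny hypothetical sample, numbers above 99 are simply read digit by digit, and symbols it does not recognize are dropped. Real front ends need far richer, context-aware rules; "St.", for instance, can mean "Street" or "Saint".

```python
import re

ONES = ["zero", "one", "two", "three", "four", "five", "six", "seven", "eight",
        "nine", "ten", "eleven", "twelve", "thirteen", "fourteen", "fifteen",
        "sixteen", "seventeen", "eighteen", "nineteen"]
TENS = ["", "", "twenty", "thirty", "forty", "fifty", "sixty", "seventy",
        "eighty", "ninety"]
# Hypothetical, deliberately tiny abbreviation table (keys are lowercase).
ABBREVIATIONS = {"dr.": "Doctor", "e.g.": "for example", "&": "and"}

def number_to_words(n: int) -> str:
    """Spell out 0-99; read larger numbers digit by digit (a simplification)."""
    if n < 20:
        return ONES[n]
    if n < 100:
        tens, ones = divmod(n, 10)
        return TENS[tens] + ("" if ones == 0 else "-" + ONES[ones])
    return " ".join(ONES[int(digit)] for digit in str(n))

def normalize(text: str) -> str:
    words = []
    for token in text.split():
        number = re.fullmatch(r"(\d+)([.,!?;:]?)", token)
        if token.lower() in ABBREVIATIONS:
            words.append(ABBREVIATIONS[token.lower()])
        elif number:
            # Keep trailing punctuation, since it still guides phrasing.
            words.append(number_to_words(int(number.group(1))) + number.group(2))
        else:
            words.append(token)
    # Replace symbols the synthesizer may not handle (emoji, #, etc.) with spaces.
    cleaned = re.sub(r"[^\w\s.,!?;:'-]", " ", " ".join(words))
    return re.sub(r"\s+", " ", cleaned).strip()

print(normalize("Dr. Lee has 3 cats & 42 fish!"))
```

Running normalization as a separate, inspectable step also makes it easier to spot and fix mispronunciations before they reach the voiceover.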

Addressing these challenges will drive the future of E2E speech synthesis, leading to even more realistic and versatile AI voiceovers.

Conclusion: The Future of Voiceovers is Here

The rise of E2E speech synthesis marks a pivotal shift in video production. This technology reshapes how video producers approach voiceovers, making the process more efficient and creative.

- Transformative Technology: E2E TTS transforms how video producers create voiceovers. It offers new levels of efficiency and quality.

- Increased Efficiency: This method streamlines production, reducing costs for video creators. It allows smaller teams to produce professional-grade voiceovers.

- Enhanced Creativity: E2E synthesis unlocks voice customization and expressiveness. Producers can tailor AI voices to match specific content needs.

As E2E speech synthesis becomes more accessible, it empowers video producers to create engaging content. It also allows for greater control over the final product.

- Stay Ahead of the Curve: Adopt E2E speech synthesis to enhance your video content. Keep pace with advancements in AI-driven tools.

- Experiment with Different Platforms and Techniques: Explore various solutions to find the best fit for your projects. Tailor your approach to meet specific requirements.

- Unlock New Levels of Engagement: Create captivating voiceovers that resonate with your audience. High-quality audio enhances the viewing experience.

E2E speech synthesis is not just a technological advancement; it's a creative tool. It allows video producers to explore new audio possibilities.