Deep Dive into Tacotron Architectures for AI Voiceover

Introduction to Tacotron and AI Voiceover

AI voiceover is rapidly changing how videos are produced, offering new levels of efficiency. But how do these AI voices actually work?

The demand for efficient and cost-effective voiceover solutions is rising. AI voiceover offers a solution by reducing production time and costs. For instance, e-learning platforms can quickly update course narration, and marketing teams can produce multilingual ad campaigns without hiring multiple voice actors.

AI voiceover is a game-changer for scalability and content creation speed. Businesses can generate voiceovers for thousands of product descriptions, personalize audio content for individual users, and automate audio production for news reports.

Traditional voiceover approaches involve hiring voice actors, booking studio time, and managing post-production. AI voiceover streamlines this process, letting creators generate high-quality audio from text in minutes.

Tacotron is a neural network architecture that converts text into speech. It is designed to generate natural-sounding speech directly from raw text input.

Tacotron uses deep learning to learn the nuances of spoken language, including phonetics, intonation, and stress, directly from paired text and audio (An end-to-end Tacotron model versus pre trained ...). This helps it produce speech that sounds more human-like than earlier text-to-speech systems.

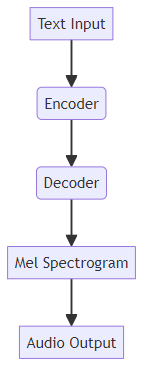

Tacotron uses an encoder-decoder structure. The encoder transforms the input text into a feature representation, and the decoder generates a mel spectrogram, which is then converted into audio (Tacotron2 architecture - learnius).

As AI voiceover technology advances, models like Tacotron will become even more integral to content creation. Building on this foundation, we'll explore the Tacotron architecture in greater detail.

Tacotron Architecture: A Detailed Breakdown

AI voiceovers are revolutionizing content creation, but what makes them sound so human? The secret lies in the Tacotron architecture, a sophisticated neural network that meticulously converts text into speech.

The encoder is the first critical component in the Tacotron architecture. It maps the input characters to a sequence of learned embeddings and processes them with neural network layers, so that each position carries information about its surrounding context, such as pronunciation cues and relationships between neighboring words.

- Role of the encoder: Converts text input into a sequence of vector representations. This involves transforming each character (or phoneme, in some implementations) into a high-dimensional vector that captures its phonetic properties in context.

- NLP tools and techniques: Employs tokenization, embedding layers, convolutional layers, and recurrent neural networks (RNNs) to process the text. These layers help the model capture context and the relationships between words.

- Hidden feature representation: The encoder outputs a sequence of information-rich hidden feature vectors, one per input symbol, that capture the essential characteristics of the text. The decoder then uses this representation to generate speech.
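The encoder's first steps can be sketched in a few lines of NumPy. This is a simplified illustration, not the Tacotron implementation: the symbol set, the 16-dimensional embeddings, and the random weights are hypothetical stand-ins for what a real model defines and learns. For comparison, the Tacotron 2 paper uses 512-dimensional character embeddings, three convolution layers (kernel size 5) with batch normalization and ReLU, and then a bidirectional LSTM; the sketch keeps only the embedding lookup and the convolution + ReLU stack.

```python
import numpy as np

# Hypothetical symbol set; real implementations define their own (some use phonemes).
SYMBOLS = "_ abcdefghijklmnopqrstuvwxyz'.,?!"
CHAR_TO_ID = {c: i for i, c in enumerate(SYMBOLS)}

def text_to_ids(text):
    """Map each supported character to an integer ID, skipping unknown characters."""
    return np.array([CHAR_TO_ID[c] for c in text.lower() if c in CHAR_TO_ID], dtype=int)

def conv1d_same(x, kernel):
    """1-D convolution over time with zero 'same' padding.
    x: (T, C_in), kernel: (K, C_in, C_out) with odd K -> (T, C_out)."""
    k = kernel.shape[0]
    xp = np.pad(x, ((k // 2, k // 2), (0, 0)))
    return np.stack([np.einsum("kc,kco->o", xp[t:t + k], kernel) for t in range(x.shape[0])])

def toy_encoder(text, emb_dim=16, n_convs=3, kernel_size=5, seed=0):
    """Embedding lookup followed by convolution + ReLU layers (random, untrained weights)."""
    rng = np.random.default_rng(seed)
    embedding = rng.normal(0, 0.1, (len(SYMBOLS), emb_dim))  # learned in a real model
    h = embedding[text_to_ids(text)]                         # (T, emb_dim)
    for _ in range(n_convs):
        kernel = rng.normal(0, 0.1, (kernel_size, emb_dim, emb_dim))
        h = np.maximum(conv1d_same(h, kernel), 0.0)          # convolution, then ReLU
    return h  # one context-aware feature vector per input character

features = toy_encoder("Hello world.")
print(features.shape)  # (12, 16)
```

Because each convolution sees five neighboring characters, stacking three of them lets every output vector draw on a window of surrounding text; the bidirectional LSTM in the real encoder extends that context to the whole sentence.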

Next is the decoder, an autoregressive recurrent neural network. The decoder predicts mel spectrogram frames, which are time-frequency representations of audio (often displayed as images).

- Autoregressive RNN: The decoder predicts one mel spectrogram frame at a time, using its previous predictions as input for the next step. This autoregressive process allows the model to generate a sequence of frames that form a coherent and natural-sounding mel spectrogram.

- Mel spectrograms: Mel spectrograms provide a way to visualize the frequency content of audio over time, with the frequency axis scaled according to human perception (the mel scale). This perceptual scaling helps the model better represent sounds that humans can distinguish. They serve as an intermediate representation between the encoded text and the final audio waveform.

- Decoding process: The decoder takes the hidden feature representation from the encoder and generates a sequence of mel spectrogram frames. Each frame represents a short segment of the audio, capturing its frequency characteristics.
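To make the mel scale concrete, here is a minimal NumPy sketch of how a log-mel spectrogram is typically computed from a linear STFT magnitude. Frequencies are mapped to mels with the common HTK-style formula m = 2595 * log10(1 + f / 700) (librosa, for example, defaults to a slightly different "Slaney" variant), and a bank of triangular filters evenly spaced in mels pools neighboring STFT bins. The defaults (22,050 Hz, 1,024-point FFT, 80 mel bands, 0 to 8,000 Hz, natural log with a 1e-5 floor) are assumptions modeled on common Tacotron 2 setups, not requirements.

```python
import numpy as np

def hz_to_mel(f):
    """HTK-style mel scale: m = 2595 * log10(1 + f / 700)."""
    return 2595.0 * np.log10(1.0 + np.asarray(f, dtype=float) / 700.0)

def mel_to_hz(m):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (np.asarray(m, dtype=float) / 2595.0) - 1.0)

def mel_filterbank(sr=22050, n_fft=1024, n_mels=80, fmin=0.0, fmax=8000.0):
    """Triangular filters evenly spaced on the mel scale; shape (n_mels, n_fft // 2 + 1)."""
    fft_freqs = np.linspace(0.0, sr / 2.0, n_fft // 2 + 1)  # frequency of each STFT bin
    hz_points = mel_to_hz(np.linspace(hz_to_mel(fmin), hz_to_mel(fmax), n_mels + 2))
    fb = np.zeros((n_mels, len(fft_freqs)))
    for i in range(n_mels):
        left, center, right = hz_points[i], hz_points[i + 1], hz_points[i + 2]
        rising = (fft_freqs - left) / (center - left)
        falling = (right - fft_freqs) / (right - center)
        fb[i] = np.maximum(0.0, np.minimum(rising, falling))  # triangle peaking at `center`
    return fb

def log_mel_spectrogram(magnitude, fb, eps=1e-5):
    """magnitude: (frames, n_fft // 2 + 1) linear STFT magnitudes -> (frames, n_mels)."""
    return np.log(np.maximum(magnitude @ fb.T, eps))  # floor avoids log(0)

fb = mel_filterbank()
print(fb.shape)  # (80, 513)
```

Because the filters are evenly spaced in mels rather than hertz, low frequencies get many narrow filters and high frequencies get a few wide ones, which is the perceptual scaling described above.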

The final stage involves using a vocoder (like WaveNet or WaveGlow) to convert the mel spectrograms into audio waveforms. This step transforms the frequency-domain representation back into the time domain, creating the actual sound.

- Vocoders (e.g., WaveNet, WaveGlow): These are specialized neural networks designed to generate raw audio waveforms from spectral representations like mel spectrograms. WaveNet, for instance, is a deep autoregressive network that generates the waveform one sample at a time. Other vocoders can also be used, each with its own trade-offs in quality and speed.

- Spectral Reconstruction: A mel spectrogram stores only magnitudes on a compressed, perceptual frequency scale; the phase and fine frequency detail are discarded. Classical methods such as the Griffin-Lim algorithm (used by the original Tacotron) first recover a linear-frequency magnitude spectrogram, then iteratively estimate a consistent phase and invert the result with the Inverse Short-Time Fourier Transform (ISTFT). Neural vocoders such as WaveNet and WaveGlow instead learn to generate the waveform directly from the mel spectrogram, implicitly filling in the missing information.

- Training the feature prediction network and vocoder separately: The feature prediction network (encoder-decoder) and the vocoder are often trained separately. This modular approach lets each network be developed and swapped independently. In the Tacotron 2 paper, the vocoder was trained on mel spectrograms predicted by the already-trained feature prediction network, so that it learned to handle that network's outputs, which helped produce more natural and stable audio.
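Classical spectral reconstruction can be illustrated with the Griffin-Lim algorithm, the phase-estimation method the original Tacotron used. It is swapped in here only because it is short enough to write out; it is not how WaveNet or WaveGlow work. This minimal NumPy sketch assumes a Hann window, a 512-point FFT, and a hop of 128 samples (illustrative choices) and starts from a linear magnitude spectrogram; a mel spectrogram would first have to be mapped back to linear frequency, for example by a learned network or an approximate inverse of the mel filterbank.

```python
import numpy as np

def stft(x, n_fft=512, hop=128):
    """Short-time Fourier transform: (n_frames, n_fft // 2 + 1) complex bins."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[i * hop:i * hop + n_fft] * window for i in range(n_frames)])
    return np.fft.rfft(frames, axis=1)

def istft(spec, n_fft=512, hop=128):
    """Least-squares inverse STFT via windowed overlap-add."""
    window = np.hanning(n_fft)
    frames = np.fft.irfft(spec, n=n_fft, axis=1) * window
    length = n_fft + hop * (len(frames) - 1)
    out, norm = np.zeros(length), np.zeros(length)
    for i, frame in enumerate(frames):
        out[i * hop:i * hop + n_fft] += frame
        norm[i * hop:i * hop + n_fft] += window ** 2
    return out / np.maximum(norm, 1e-8)

def griffin_lim(magnitude, n_iter=50, n_fft=512, hop=128, seed=0):
    """Estimate a waveform whose STFT magnitude approximates `magnitude`."""
    rng = np.random.default_rng(seed)
    phase = np.exp(2j * np.pi * rng.random(magnitude.shape))  # random initial phase
    for _ in range(n_iter):
        signal = istft(magnitude * phase, n_fft, hop)  # impose the target magnitude
        rebuilt = stft(signal, n_fft, hop)             # STFT of an actual signal
        phase = np.exp(1j * np.angle(rebuilt))         # keep only its phase
    return istft(magnitude * phase, n_fft, hop)

# Usage: round-trip a 440 Hz tone through magnitude-only information.
sr = 16000
tone = np.sin(2 * np.pi * 440.0 * np.arange(sr) / sr)
target = np.abs(stft(tone))     # phase is discarded here
estimate = griffin_lim(target)  # phase is re-estimated here
```

Each iteration alternates between "has the right magnitude" and "is the STFT of a real signal"; the gap between the two shrinks over iterations, but artifacts from the imperfect phase estimate are one reason neural vocoders replaced this step.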

The Tacotron architecture's modular design allows each stage to be improved independently, leading to increasingly realistic AI voiceovers. Next, we'll look at how Tacotron 2 builds on this design.

Tacotron 2: Enhancements and Improvements

Tacotron 2 improves upon the original Tacotron, delivering more natural and higher-quality speech. What makes this evolution so significant for AI voiceover technology?

Tacotron 2 marks a significant leap in text-to-speech (TTS) technology. It offers noticeable improvements in the naturalness and overall quality of the generated speech compared to the original Tacotron.

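- A key enhancement in Tacotron 2 is its attention mechanism. Where the original Tacotron used content-based attention, Tacotron 2 uses location-sensitive attention, which also considers where the model attended at previous steps. This yields better alignment between the input text and the generated audio, leading to more coherent and natural-sounding speech.

- Tacotron 2 also simplifies its building blocks: the encoder and decoder use convolutional layers and LSTMs instead of the original's "CBHG" stacks and GRU layers, and the Griffin-Lim reconstruction used by the original Tacotron is replaced with a neural vocoder (a modified WaveNet in the Tacotron 2 paper). These changes contribute to the model's improved speech quality.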

- For example, in e-learning, Tacotron 2 can produce clearer and more engaging narration for online courses. For marketing, it allows for creating voiceovers that better capture the nuances of human speech.
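The location-sensitive attention that Tacotron 2 adopts (from Chorowski et al.'s work on attention-based speech recognition) can be summarized as follows. At decoder step i, each encoder output h_j is scored using the decoder query state s_i and location features f_{i,j}, obtained by convolving the cumulative attention weights from previous steps with learned filters F; W, V, U, w, and b are learned parameters. This is a condensed summary of the idea rather than a reproduction of the paper's exact notation.

```latex
\begin{aligned}
f_i &= F * \textstyle\sum_{k<i} \alpha_k \\
e_{i,j} &= w^\top \tanh\left(W s_i + V h_j + U f_{i,j} + b\right) \\
\alpha_{i,j} &= \frac{\exp(e_{i,j})}{\sum_{j'} \exp(e_{i,j'})} \\
c_i &= \textstyle\sum_j \alpha_{i,j} h_j
\end{aligned}
```

The context vector c_i is what the decoder combines with its own state to predict the next mel frame. The location term U f_{i,j} is what distinguishes this from purely content-based attention: it encourages the alignment to move steadily forward through the text instead of skipping or repeating words.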

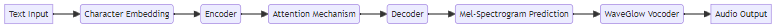

Tacotron 2 employs a sophisticated architecture to convert text into speech. Each component plays a crucial role in the overall process.

- Encoder: The encoder begins by embedding the input characters and processing them to create a contextual representation of the text. This involves converting each character into a vector that captures its phonetic and semantic properties.

- Attention Mechanism: The attention mechanism aligns the encoded text features with the audio frames. This alignment ensures that the generated speech accurately reflects the input text, improving clarity and coherence.

- Decoder: The decoder then predicts mel-spectrogram frames based on the aligned text features. These frames represent the frequency content of the audio over time and serve as an intermediate representation between the text and the final audio.

- Vocoder (e.g., WaveGlow): Finally, a neural vocoder such as WaveGlow synthesizes the audio waveform from the predicted mel-spectrogram. This step transforms the frequency-domain representation into the time domain, producing the actual audio signal.
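The interaction between attention and the autoregressive decoder can be sketched as a loop. This is a heavily simplified, hypothetical model: it uses plain dot-product attention instead of Tacotron 2's location-sensitive attention, feeds the previous mel frame straight into the attention query (Tacotron 2 passes it through a pre-net and LSTM layers first), and uses random, untrained weights, so the frames themselves are meaningless. What it does show is the control flow: at each step, attention weights over the encoder outputs form a context vector, the next frame is predicted from that context plus the previous frame, and a stop probability above 0.5 (the threshold described in the Tacotron 2 paper) ends generation.

```python
import numpy as np

def softmax(x):
    e = np.exp(x - x.max())
    return e / e.sum()

def toy_decode(encoder_out, n_mels=80, max_steps=200, stop_threshold=0.5, seed=0):
    """Hypothetical autoregressive decoder with dot-product attention (untrained weights).

    encoder_out: (T_text, d) encoder features.
    Returns mel frames (n_steps, n_mels) and attention weights (n_steps, T_text).
    """
    rng = np.random.default_rng(seed)
    d = encoder_out.shape[1]
    W_query = rng.normal(0, 0.1, (n_mels, d))           # previous frame -> attention query
    W_frame = rng.normal(0, 0.1, (d + n_mels, n_mels))  # [context, previous frame] -> next frame
    w_stop = rng.normal(0, 0.1, d + n_mels)             # [context, previous frame] -> stop logit

    prev_frame = np.zeros(n_mels)  # all-zero "go" frame starts decoding
    frames, alignments = [], []
    for _ in range(max_steps):
        weights = softmax(encoder_out @ (prev_frame @ W_query))  # attention over text positions
        context = weights @ encoder_out                           # weighted sum of encoder features
        features = np.concatenate([context, prev_frame])
        frame = np.tanh(features @ W_frame)                       # predict the next mel frame
        frames.append(frame)
        alignments.append(weights)
        stop_prob = 1.0 / (1.0 + np.exp(-(features @ w_stop)))
        if stop_prob > stop_threshold:                            # stop token ends generation
            break
        prev_frame = frame                                        # feed the prediction back in
    return np.array(frames), np.array(alignments)

encoder_out = np.random.default_rng(1).normal(size=(12, 16))
mel, alignment = toy_decode(encoder_out)
print(mel.shape, alignment.shape)
```

Stacking the per-step attention weights gives an alignment matrix (decoder steps by text positions); in a well-trained model it forms a roughly diagonal band, which is the "alignment" the attention mechanism is meant to learn.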

Regularization techniques are crucial for preventing overfitting and improving the generalization of deep learning models. Tacotron 2 implementations differ in which regularization methods they use.

- Dropout and Zoneout are two such techniques. Dropout randomly sets a fraction of the input units to zero during training to prevent reliance on specific neurons. Zoneout, on the other hand, randomly replaces a fraction of the hidden units with their previous values. This helps preserve temporal dependencies in recurrent connections, encouraging the model to learn more robust sequential patterns rather than relying on a single timestep's information.

- Some implementations of Tacotron 2 prefer Dropout over Zoneout due to its simplicity and effectiveness. Dropout is easier to implement and can provide similar or better results in certain scenarios.

- For example, Tacotron 2 – PyTorch uses Dropout instead of Zoneout to regularize the LSTM layers.
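The difference between the two regularizers is easiest to see side by side. In this minimal NumPy sketch, applied to a hidden-state vector, dropout zeros units and rescales the survivors (the common "inverted dropout" form), while zoneout keeps each unit's previous value with probability p. At inference, dropout is switched off, and zoneout's random choice is replaced by its expected value, following the convention described for zoneout. The function names and signatures are hypothetical, not PyTorch APIs.

```python
import numpy as np

def dropout(x, p, rng, training=True):
    """Inverted dropout: zero each unit with probability p, rescale survivors by 1/(1-p)."""
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return x
    keep = rng.random(x.shape) >= p
    return x * keep / (1.0 - p)

def zoneout(h_prev, h_new, p, rng, training=True):
    """Zoneout: each hidden unit keeps its previous value with probability p."""
    if not training:
        return p * h_prev + (1.0 - p) * h_new  # expected value at inference
    keep_prev = rng.random(h_new.shape) < p
    return np.where(keep_prev, h_prev, h_new)

rng = np.random.default_rng(0)
h_prev, h_new = np.zeros(8), np.ones(8)
print(dropout(h_new, 0.5, rng))          # a mix of 0.0 and 2.0
print(zoneout(h_prev, h_new, 0.5, rng))  # a mix of 0.0 (kept) and 1.0 (updated)
```

Dropout destroys information in the affected units, while zoneout only delays updates, so the recurrent state still carries information forward; that is why zoneout is often described as better suited to recurrent connections.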

These architectural and regularization choices helped Tacotron 2 achieve state-of-the-art text-to-speech quality when it was introduced. Next, let's examine the vocoder that turns its mel spectrograms into audio.

The Role of WaveGlow in Tacotron 2

Ever wondered how AI generates speech that sounds almost human? WaveGlow, a type of vocoder, plays a crucial role in Tacotron 2 by converting mel spectrograms into realistic audio.

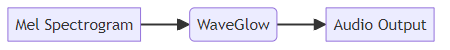

In NVIDIA's Tacotron 2 implementation, WaveGlow replaces earlier vocoders like WaveNet for waveform synthesis. It generates high-quality audio from mel spectrograms efficiently, which contributes to the overall naturalness of the AI-generated voice.

- In this pipeline, Tacotron 2 predicts the mel spectrogram and WaveGlow transforms it into audio, improving speed while preserving quality.

- WaveGlow's architecture enables much faster audio generation than WaveNet, making it suitable for real-time applications.

- Unlike vocoders built on autoregressive models (which generate one sample at a time), WaveGlow is a flow-based generative model that generates all samples in parallel. This makes it more efficient without sacrificing audio quality.
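A back-of-the-envelope count shows where the speed difference comes from. An autoregressive vocoder must run one network pass per output sample, in order, whereas a flow-based vocoder runs a fixed number of flow steps, each over all samples at once. The sketch assumes a 22,050 Hz output rate and 12 flow steps (the configuration reported in the WaveGlow paper); it counts sequential passes only and ignores how expensive each pass is.

```python
SAMPLE_RATE = 22050  # output sample rate assumed here (the LJ Speech rate)
FLOW_STEPS = 12      # number of flow steps assumed here (WaveGlow paper configuration)

def sequential_passes(duration_s, autoregressive):
    """Network passes that must run one after another to produce `duration_s` of audio."""
    n_samples = int(round(duration_s * SAMPLE_RATE))
    # Autoregressive: every sample depends on the previous one.
    # Flow-based: every flow step transforms all samples in parallel.
    return n_samples if autoregressive else FLOW_STEPS

for seconds in (1.0, 10.0):
    print(seconds, sequential_passes(seconds, True), sequential_passes(seconds, False))
```

Each WaveGlow pass is much larger than a single WaveNet step, but a GPU can process all samples of a pass in parallel, which is what makes faster-than-real-time synthesis practical.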

WaveGlow is a flow-based generative model for audio. During training, it maps audio samples, conditioned on the mel spectrogram, to a simple Gaussian distribution through a series of invertible transformations (flows). Because every transformation is invertible, the model can be run in either direction.

- WaveGlow employs invertible 1x1 convolutions, which mix information across channels while remaining exactly invertible.

- Affine coupling layers scale and shift half of the channels using values computed from the other half and the mel spectrogram. This keeps each layer easy to invert and makes its contribution to the likelihood (the log-determinant) cheap to compute.

- During inference, WaveGlow inverts the transformation process, generating audio samples from the Gaussian distribution conditioned on the mel spectrogram.
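The two building blocks can be checked for invertibility with a small NumPy sketch. Everything here is a hypothetical toy: the sizes are tiny, the mel conditioning is random and assumed to be already upsampled to the audio's time resolution, and the WaveNet-like network that WaveGlow uses inside each coupling layer is replaced by a fixed random linear map followed by tanh. The 1x1 convolution is initialized as a random orthogonal matrix, so its log-determinant starts at zero. Each layer also returns the log-determinant term that training adds to the Gaussian log-likelihood of the output.

```python
import numpy as np

rng = np.random.default_rng(0)
C, T, MEL = 8, 16, 4  # hypothetical: channels (grouped samples), time steps, mel features

class Invertible1x1Conv:
    """Mixes channels with an invertible matrix W, applied identically at every time step."""

    def __init__(self, channels, rng):
        q, _ = np.linalg.qr(rng.normal(size=(channels, channels)))
        self.W = q  # random orthogonal initialization, so |det W| = 1

    def forward(self, x):  # x: (channels, time)
        log_det = x.shape[1] * np.log(abs(np.linalg.det(self.W)))
        return self.W @ x, log_det

    def inverse(self, z):
        return np.linalg.solve(self.W, z)

class AffineCoupling:
    """Scales and shifts half of the channels using the other half plus mel conditioning."""

    def __init__(self, channels, mel_dim, rng):
        half = channels // 2
        # Stand-in for WaveGlow's WaveNet-like network: fixed random linear map + tanh.
        self.A = rng.normal(0, 0.1, (2 * half, half + mel_dim))

    def _scale_shift(self, xa, mel):
        h = np.tanh(self.A @ np.concatenate([xa, mel], axis=0))
        log_s, t = np.split(h, 2, axis=0)
        return log_s, t

    def forward(self, x, mel):
        xa, xb = np.split(x, 2, axis=0)
        log_s, t = self._scale_shift(xa, mel)
        yb = xb * np.exp(log_s) + t
        return np.concatenate([xa, yb], axis=0), log_s.sum()

    def inverse(self, y, mel):
        ya, yb = np.split(y, 2, axis=0)
        log_s, t = self._scale_shift(ya, mel)  # ya equals xa, so scale/shift are recomputed exactly
        xb = (yb - t) * np.exp(-log_s)
        return np.concatenate([ya, xb], axis=0)

audio = rng.normal(size=(C, T))  # stand-in for audio samples grouped into channels
mel = rng.normal(size=(MEL, T))  # stand-in for upsampled mel conditioning
conv, coupling = Invertible1x1Conv(C, rng), AffineCoupling(C, MEL, rng)

z, log_det_conv = conv.forward(audio)               # training direction: audio -> z
z, log_det_coupling = coupling.forward(z, mel)
recovered = conv.inverse(coupling.inverse(z, mel))  # inference direction: z -> audio
print(np.allclose(recovered, audio))                # True
```

The coupling layer never needs to invert its inner network: the unchanged half of the channels lets the inverse recompute the same scale and shift, which is why that inner network can be arbitrarily complex.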

WaveGlow's unique architecture and flow-based approach make it a powerful tool for generating high-quality audio in Tacotron 2. By efficiently converting mel spectrograms into realistic waveforms, WaveGlow contributes significantly to the naturalness of AI voiceovers.

To further understand how these models are put into practice, let's delve into the specifics of training and implementation.

Training and Implementation

Training AI models like Tacotron and Tacotron 2 is a complex yet fascinating process. How do developers ensure these models produce high-quality, natural-sounding speech?

Training Tacotron models requires extensive datasets of speech and corresponding text. The LJ Speech dataset is a common choice, offering high-quality recordings of a single speaker.

- Text normalization is a crucial preprocessing step. This involves converting raw text into a consistent format that the model can understand, handling abbreviations, numbers, and special characters.

- Audio preprocessing techniques, such as converting audio to a specific sample rate and normalizing the volume, also play a vital role. These steps ensure consistency across the dataset.

- High-quality data is essential for optimal model performance. Noisy or poorly transcribed data can lead to lower-quality speech synthesis.
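A toy text normalizer illustrates the preprocessing step. The abbreviation table, the number speller (which only handles 0 to 999), and the allowed character set are hypothetical and far smaller than what production pipelines use, but the order of operations (lowercase, expand abbreviations and symbols, spell out numbers, drop unsupported characters, collapse whitespace) is typical.

```python
import re

# Hypothetical lookup tables; production normalizers cover far more cases.
ABBREVIATIONS = {"dr.": "doctor", "mr.": "mister", "mrs.": "misses"}
ONES = ["zero", "one", "two", "three", "four", "five", "six", "seven", "eight",
        "nine", "ten", "eleven", "twelve", "thirteen", "fourteen", "fifteen",
        "sixteen", "seventeen", "eighteen", "nineteen"]
TENS = ["", "", "twenty", "thirty", "forty", "fifty", "sixty", "seventy",
        "eighty", "ninety"]

def number_to_words(n):
    """Spell out an integer from 0 to 999."""
    if n < 20:
        return ONES[n]
    if n < 100:
        tens, ones = divmod(n, 10)
        return TENS[tens] + ("" if ones == 0 else " " + ONES[ones])
    hundreds, rest = divmod(n, 100)
    words = ONES[hundreds] + " hundred"
    return words if rest == 0 else words + " " + number_to_words(rest)

def _spell_number(match):
    value = int(match.group())
    # Numbers of 1000 or more are left as digits in this sketch.
    return number_to_words(value) if value < 1000 else match.group()

def normalize_text(text):
    text = text.lower()
    for abbr, full in ABBREVIATIONS.items():
        # \b stops an abbreviation from matching inside a longer word.
        text = re.sub(r"\b" + re.escape(abbr), full, text)
    text = text.replace("&", " and ")
    text = re.sub(r"\d+", _spell_number, text)
    text = re.sub(r"[^a-z0-9 '.,?!-]", " ", text)  # replace unsupported characters
    return re.sub(r"\s+", " ", text).strip()       # collapse whitespace

print(normalize_text("Dr. Smith has 3 cats & 21 dogs!"))
# doctor smith has three cats and twenty one dogs!
```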

Mixed precision training can significantly speed up the training process. It also reduces memory usage by using lower precision (e.g., FP16) for certain calculations.

- Automatic Mixed Precision (AMP) is a technique used to leverage mixed precision training in PyTorch. AMP automatically chooses the precision of different operations, improving performance typically without sacrificing accuracy (Tacotron 2 – PyTorch).

- Optimizing model parameters and hyperparameters is crucial for achieving the best results. Techniques like grid search or Bayesian optimization can help find the optimal settings.

- Multi-GPU training and distributed data parallelism can further accelerate training by distributing the workload across multiple devices. This is especially useful for large datasets and complex models.
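Loss scaling is the part of AMP that is easiest to miss, and a small NumPy simulation shows why it matters: a gradient of 1e-8 is below the smallest positive FP16 value and rounds to zero, but it survives if the loss (and therefore every gradient) is multiplied by a large factor before the FP16 computation and divided back out in FP32. The ToyLossScaler class is hypothetical. In PyTorch, torch.cuda.amp.GradScaler (torch.amp.GradScaler in recent releases) does this automatically: it starts from a scale of 2**16, halves the scale and skips the optimizer step when gradients overflow, and doubles it after a run of stable steps (2,000 by default; 3 here so the behavior is easy to observe).

```python
import numpy as np

class ToyLossScaler:
    """Hypothetical dynamic loss scaler mimicking the idea behind AMP's GradScaler."""

    def __init__(self, init_scale=2.0 ** 16, growth_interval=3):
        self.scale = init_scale
        self.growth_interval = growth_interval
        self._good_steps = 0

    def scaled_fp16_grads(self, fp32_grads):
        """Simulate gradients of a scaled loss that were computed in FP16."""
        with np.errstate(over="ignore"):
            return (fp32_grads * self.scale).astype(np.float16)

    def unscale_and_check(self, fp16_grads):
        """Unscale in FP32; return None (skip the step) if anything overflowed."""
        grads = fp16_grads.astype(np.float32) / self.scale
        if not np.all(np.isfinite(grads)):
            self.scale /= 2.0  # overflow: back off and skip this update
            self._good_steps = 0
            return None
        self._good_steps += 1
        if self._good_steps % self.growth_interval == 0:
            self.scale *= 2.0  # stable for a while: try a larger scale
        return grads

tiny_grad = np.array([1e-8], dtype=np.float32)
print(tiny_grad.astype(np.float16))  # underflows to zero without scaling
scaler = ToyLossScaler()
print(scaler.unscale_and_check(scaler.scaled_fp16_grads(tiny_grad)))  # close to 1e-8
```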

Generating speech with pre-trained Tacotron 2 and WaveGlow models involves a few key steps. Here’s a simplified example using PyTorch:

```python
import torch
from scipy.io.wavfile import write  # Writes NumPy arrays to WAV files

# Load the pre-trained Tacotron 2 model (FP16 weights) from PyTorch Hub
tacotron2 = torch.hub.load('NVIDIA/DeepLearningExamples:torchhub', 'nvidia_tacotron2', model_math='fp16')
tacotron2 = tacotron2.to('cuda')  # Move model to GPU
tacotron2.eval()                  # Set model to evaluation mode

# Load the pre-trained WaveGlow vocoder
waveglow = torch.hub.load('NVIDIA/DeepLearningExamples:torchhub', 'nvidia_waveglow', model_math='fp16')
waveglow = waveglow.remove_weightnorm(waveglow)  # Remove weight normalization for inference
waveglow = waveglow.to('cuda')  # Move model to GPU
waveglow.eval()                 # Set model to evaluation mode

text = "Hello world, I missed you so much."  # The input text for speech synthesis

# Helper utilities that convert text into the model's input format
utils = torch.hub.load('NVIDIA/DeepLearningExamples:torchhub', 'nvidia_tts_utils')
sequences, lengths = utils.prepare_input_sequence([text])  # Padded symbol-ID tensors plus their lengths

with torch.no_grad():  # Disable gradient calculation for inference
    mel, _, _ = tacotron2.infer(sequences, lengths)  # Infer mel spectrogram from text
    audio = waveglow.infer(mel)                      # Synthesize audio waveform from mel spectrogram

# Take the first (only) waveform in the batch as a float32 NumPy array;
# the FP16 models may return half-precision tensors, and float32 is a widely supported WAV format.
audio_numpy = audio[0].float().cpu().numpy()
rate = 22050  # Sample rate of the LJ Speech data the pretrained models were trained on

write("audio.wav", rate, audio_numpy)  # Save the synthesized audio to a WAV file
```

This code snippet, which assumes a CUDA-capable GPU, demonstrates how to load pre-trained models, prepare the input text, generate mel spectrograms, synthesize audio, and save the output to a file. In short, the Tacotron 2 model transforms the text into a mel spectrogram, which is then fed into the WaveGlow vocoder to produce the final audio waveform.

Proper training and implementation are essential for creating high-quality AI voiceovers using Tacotron architectures. Now, let's look at how these models can be applied in video production.

Applications and Use Cases in Video Production

AI voiceovers are transforming video production, but how can you apply this technology effectively? Tacotron architectures offer diverse applications, from automating explainer videos to creating multilingual content.

Kveeky is an example of a service that leverages AI voice generation technologies, potentially including Tacotron architectures, to offer AI scriptwriting, voiceover services in multiple languages, and customizable voice options. It also features text-to-speech generation and a user-friendly interface. A free trial is available without requiring a credit card.

Tools like Kveeky transform scripts into lifelike voiceovers with ease. This makes it simple to produce professional-sounding audio for various video projects.

Consider using such services to enhance your video content with high-quality AI voiceovers.

AI voiceovers enable the generation of voiceovers for explainer videos without human intervention. This automation significantly reduces production time.

You can customize voice styles and tones to match the video's theme. This ensures the voiceover complements the visual content and maintains a consistent brand voice.

This approach reduces production costs and turnaround time. Businesses can quickly create and update explainer videos, keeping their content fresh and relevant.

Creating voiceovers in multiple languages helps reach international audiences. This is especially valuable for businesses expanding into new markets.

AI models can adapt voice styles and accents to different regions. This ensures the voiceover resonates with local viewers, enhancing engagement.

By using multilingual voiceovers, you can expand the reach and impact of video content. This can lead to increased brand awareness and customer acquisition in global markets.

Tacotron-based AI voiceovers provide versatile solutions for modern video production. Looking ahead, let's explore some future trends and challenges in this field.

Future Trends and Challenges

The future of AI voiceover is rapidly evolving. What trends and challenges lie ahead for Tacotron architectures?

Increasing Naturalness and Expressiveness: We can expect AI speech to become even more natural and expressive. Advancements in model architectures and training data will allow for more nuanced prosody, better control over emotional tone, and a wider range of vocal styles, bringing AI voices ever closer to being indistinguishable from human ones.

Emotional Nuances and Personalization: AI models will likely incorporate a deeper understanding of emotions and context, enabling them to deliver speech with specific emotional inflections. This will lead to more personalized audio content, tailored to individual listener preferences or the specific mood of a video.

Adaptability to Diverse Content Needs: Developers will create more robust models that can be easily adapted to various content needs, from audiobook narration and podcasting to character voices in games and virtual assistants. This adaptability will involve fine-tuning models for specific speaking styles, accents, and even unique character personas.

Ensuring Transparency: Ensuring transparency in AI voiceover usage remains crucial. It's important for listeners to know when they are interacting with an AI-generated voice, especially in sensitive contexts like news reporting or customer service. Clear labeling and disclosure mechanisms will be key.

Protecting Against Misuse (Deepfakes): Protecting against misuse, such as the creation of malicious deepfakes or the impersonation of individuals, is an essential challenge. Developing robust detection methods and ethical guidelines for AI voice generation will be paramount.

Responsible AI Development and Deployment: Prioritizing responsible AI development and deployment means considering the ethical implications at every stage. This includes addressing potential biases in training data, ensuring fair access to the technology, and establishing clear accountability frameworks for its use.

AI voiceover technology will continue to advance rapidly, presenting both exciting opportunities and significant responsibilities.