Unlocking Clarity: A Video Producer's Guide to Voice Source Separation in AI Voiceover


Introduction to Voice Source Separation

It's no secret that audio quality can make or break a video. Imagine trying to decipher a crucial line of dialogue buried under a cacophony of background noise.

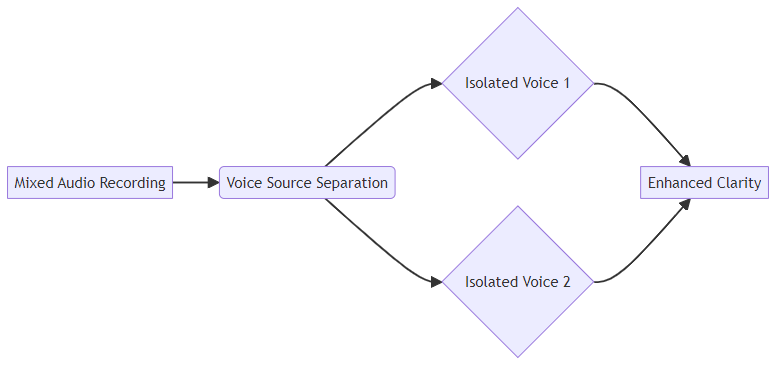

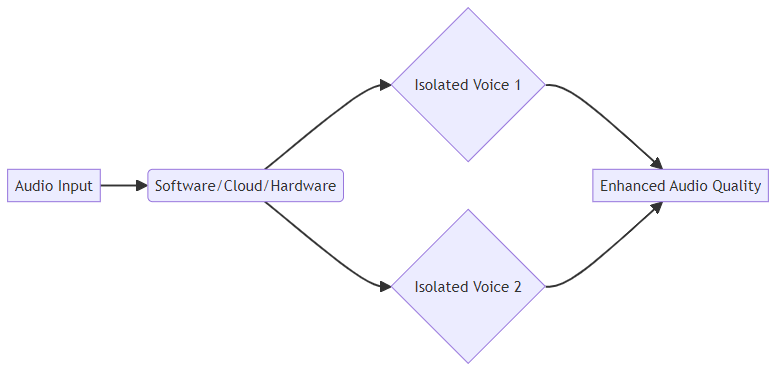

Voice source separation is the process of isolating individual voices from a mixed audio recording. Think of it as digitally untangling a knot of overlapping sounds. Mathematically, the simplest model treats the recording as a sum of sources, $y(t) = \sum_{i=1}^{N} x_i(t)$, where $y(t)$ is the mixed audio signal and $x_i(t)$ is the $i$-th of $N$ individual sources. This is a simplified model of how sounds mix; real recordings also involve room reverberation and microphone coloration, so the actual separation process is more complex. This technology is particularly useful when multiple people speak simultaneously or when background noise is present.
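To make the additive model concrete, here is a minimal Python sketch, assuming two pure sine waves stand in for real voices (real speech is far richer). The helper names `tone` and `mix` are illustrative, not from any library; the point is only that the recording is the sample-by-sample sum of its sources.

```python
import math

SAMPLE_RATE = 16_000  # samples per second (assumed)

def tone(freq_hz, amplitude, n_samples, sr=SAMPLE_RATE):
    """Toy 'voice': a pure sine wave standing in for one source x_i(t)."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * n / sr) for n in range(n_samples)]

def mix(sources):
    """Additive mixing model: y(t) = sum_i x_i(t), computed sample by sample."""
    return [sum(samples) for samples in zip(*sources)]

voice_a = tone(220.0, 0.5, 160)  # hypothetical speaker A
voice_b = tone(330.0, 0.3, 160)  # hypothetical speaker B
y = mix([voice_a, voice_b])      # what the microphone records
```

Separation is the inverse problem: given only `y`, recover `voice_a` and `voice_b`. Because many different pairs of signals sum to the same `y`, that inverse needs extra assumptions or learned knowledge about what voices sound like.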

Here's why video producers should care about voice source separation:

- Improved audio clarity: Clean up messy audio and deliver crystal-clear voiceovers. For instance, in e-learning, you can isolate the instructor's voice from background music, ensuring students focus on the lesson.

- Reduced editing time: Isolate the exact audio you need, saving hours of manual editing. A video producer working on a documentary, for example, can quickly extract specific interview segments.

- Enhanced accessibility: Make your content more accessible by creating separate dialogue tracks. By separating dialogue from music and sound effects, you allow viewers to focus on the spoken content.

Despite its potential, voice source separation isn't a perfect science. Here are some challenges:

- Overlapping Frequencies: Human voices often share similar frequency ranges, making it difficult to distinguish them.

- Variations in Speech: Accents and speech patterns add complexity to the separation process.

- Computational Complexity: Real-time voice separation demands significant processing power.

As the images above illustrate, voice source separation can isolate voices and improve their clarity.

Understanding these challenges is the first step toward leveraging voice source separation effectively, which we'll cover in the next section.

Traditional vs. AI-Powered Voice Source Separation

Did you know that early attempts at voice separation relied on techniques as simple as adjusting microphone positions? Today, the field has leaped forward, embracing the power of artificial intelligence.

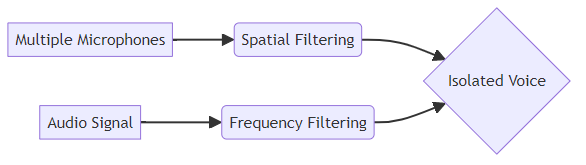

Traditional voice source separation methods often depend on spatial or frequency-based filtering. Spatial filtering uses multiple microphones to capture sound from different locations, isolating voices based on their point of origin. Frequency-based techniques, on the other hand, isolate voices by targeting specific frequency ranges.

- Spatial filtering is like focusing a camera on a specific spot. However, this method needs multiple microphones and controlled environments, which adds to the cost and complexity.

- Frequency-based filtering is more flexible. However, it struggles when voices overlap in frequency, limiting its effectiveness in complex audio environments.
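The frequency-based approach, and its weakness, can be shown in a few lines. This minimal Python sketch uses a naive DFT (a real tool would use an FFT over short overlapping frames) and synthetic sine waves as assumed stand-ins for a voice, high-frequency noise, and a second voice. Keeping only the 100 to 500 Hz band removes the noise cleanly but cannot remove the second voice, because it sits in the same band.

```python
import cmath
import math

def dft(x):
    """Naive discrete Fourier transform (O(n^2)); fine for short illustrative signals."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)) for k in range(n)]

def idft(X):
    """Inverse DFT, keeping the real part (the spectrum is assumed conjugate-symmetric)."""
    n = len(X)
    return [(sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n).real
            for t in range(n)]

def band_filter(x, sample_rate, low_hz, high_hz):
    """Keep only DFT bins whose frequency lies in [low_hz, high_hz]; zero the rest."""
    n = len(x)
    X = dft(x)
    for k in range(n):
        freq = min(k, n - k) * sample_rate / n  # bins above n/2 mirror negative frequencies
        if not low_hz <= freq <= high_hz:
            X[k] = 0
    return idft(X)

SR, N = 16_000, 160  # 100 Hz per bin, so 200, 300 and 1000 Hz fall exactly on bins

def sine(freq_hz, amp):
    return [amp * math.sin(2 * math.pi * freq_hz * t / SR) for t in range(N)]

voice = sine(200, 0.5)
hiss = sine(1000, 0.3)   # stand-in for noise in a different band
rival = sine(300, 0.3)   # stand-in for a second voice in the same band

kept = band_filter([v + h for v, h in zip(voice, hiss)], SR, 100, 500)
clean_case_error = max(abs(a - b) for a, b in zip(kept, voice))    # essentially zero

kept = band_filter([v + r for v, r in zip(voice, rival)], SR, 100, 500)
overlap_case_error = max(abs(a - b) for a, b in zip(kept, voice))  # the rival voice survives
```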

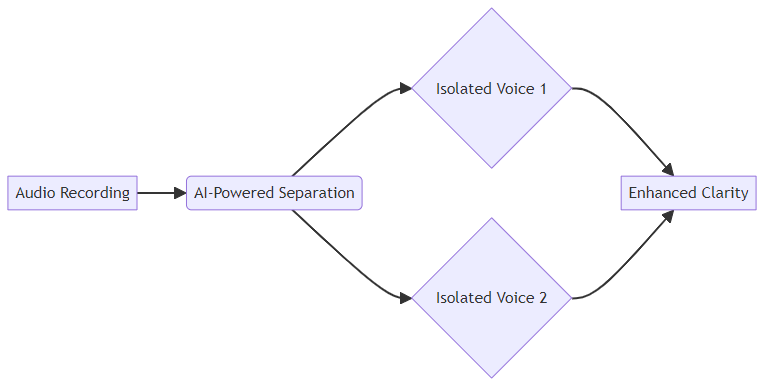

AI-powered voice source separation marks a significant leap. These methods use deep learning models to recognize complex patterns in audio, achieving impressive results even with single-microphone recordings.

- Deep learning models analyze audio and identify unique characteristics of individual voices. This allows for more accurate separation, even when voices overlap.

- Blind Source Separation (BSS) algorithms are signal processing techniques that aim to separate a set of mixed signals into their original source signals with little or no prior knowledge of the sources or the mixing process. In the context of AI, these algorithms are often implemented using neural networks that learn to identify and disentangle the individual audio streams.

- Supervised learning involves training AI models with labeled data, such as mixtures paired with the clean voices they contain. The models learn to differentiate between voices and isolate them accordingly.
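One common way supervised models are set up, sketched here in plain Python under simplifying assumptions, is to predict a time-frequency mask. With labeled data (clean speech and noise available separately), the training target can be the ideal ratio mask: the fraction of each bin's magnitude that belongs to speech. A network is then trained to predict this mask from the mixture alone. The toy spectrogram values below are made up, and definitions of the ratio mask vary (some use powers and a square root).

```python
def ideal_ratio_mask(speech_mag, noise_mag, eps=1e-8):
    """Per time-frequency bin: |S| / (|S| + |N|), a gain between 0 and 1.

    Requires the clean speech and the noise separately, i.e. labeled training data.
    """
    return [[s / (s + n + eps) for s, n in zip(s_row, n_row)]
            for s_row, n_row in zip(speech_mag, noise_mag)]

def apply_mask(mix_mag, mask):
    """Scale each bin of the mixture's magnitude spectrogram by the mask value."""
    return [[m * g for m, g in zip(m_row, g_row)] for m_row, g_row in zip(mix_mag, mask)]

# Toy 2-frame x 3-bin magnitude spectrograms (rows = frames, columns = frequency bins)
speech = [[1.0, 0.0, 0.5], [0.2, 2.0, 0.0]]
noise = [[0.0, 1.0, 0.5], [0.8, 0.0, 1.0]]
# Simplification: treat mixture magnitudes as additive (ignores phase interactions)
mixture = [[s + n for s, n in zip(s_row, n_row)] for s_row, n_row in zip(speech, noise)]

mask = ideal_ratio_mask(speech, noise)
estimate = apply_mask(mixture, mask)  # close to `speech` under this simplification
```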

Humans can focus on a single voice in a noisy environment, a phenomenon known as the "cocktail party effect." AI-powered systems now aim to mimic this ability.

- Neural networks, loosely inspired by the human brain, learn to filter out distractions and focus on specific audio sources.

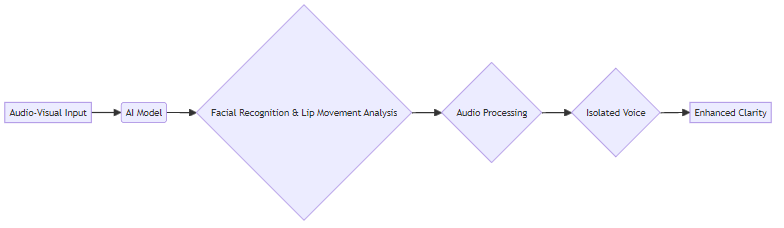

- Visual cues, such as lip movements, help AI systems resolve the permutation problem. In source separation, the permutation problem is the ambiguity about which separated output corresponds to which original source when multiple sources are present. For example, with two speakers, the algorithm might output "Speaker A's voice, Speaker B's voice" or "Speaker B's voice, Speaker A's voice." Tracking lip movements helps the AI consistently associate each audio stream with the visible speaker over time, resolving this ambiguity and ensuring voices are correctly identified.
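The lip-movement idea can be sketched as an assignment problem. In this minimal Python example, each separated output is summarized by a per-frame energy envelope, and each on-screen face by a hypothetical mouth-opening envelope from a face tracker (both assumed to share the same frame rate). The sketch tries every ordering and keeps the one whose envelopes correlate best. The envelope values are invented for illustration, and brute force is only practical for a handful of speakers because the number of orderings grows factorially.

```python
import math
from itertools import permutations

def pearson(a, b):
    """Pearson correlation of two equal-length sequences (0.0 if either is constant)."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = math.sqrt(sum((x - ma) ** 2 for x in a))
    sb = math.sqrt(sum((y - mb) ** 2 for y in b))
    return 0.0 if sa == 0 or sb == 0 else cov / (sa * sb)

def assign_outputs(output_envelopes, lip_envelopes):
    """Return a tuple whose entry i is the index of the output assigned to speaker i."""
    best, best_score = None, -math.inf
    for perm in permutations(range(len(output_envelopes))):
        score = sum(pearson(output_envelopes[perm[spk]], lip_envelopes[spk])
                    for spk in range(len(lip_envelopes)))
        if score > best_score:
            best, best_score = perm, score
    return best

# Hypothetical per-frame envelopes: mouth opening per speaker, energy per separated output
lips = [[0, 1, 1, 0, 0, 1],   # speaker A
        [1, 0, 0, 1, 1, 0]]   # speaker B
outputs = [[0.9, 0.1, 0.0, 0.8, 1.0, 0.2],
           [0.1, 0.9, 1.0, 0.0, 0.1, 0.8]]
order = assign_outputs(outputs, lips)
```

Here `order` is `(1, 0)`: output 1 tracks speaker A's mouth and output 0 tracks speaker B's, whatever order the separator happened to emit them in.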

As AI continues to evolve, voice source separation promises even more refined and practical applications.

AI Voiceover and Text-to-Speech: Kveeky

Is creating high-quality voiceovers taking up too much of your time and resources? Kveeky offers an AI-powered solution to streamline your video production workflow.

Kveeky is an AI voiceover tool designed to help video producers create lifelike voiceovers quickly and efficiently. You can easily transform scripts into engaging audio using customizable voice options.

Kveeky Features and Customization

Kveeky provides a user-friendly platform that simplifies the process of creating professional voiceovers, allowing video producers to focus on other critical aspects of their projects.

- Lifelike Voiceovers from Scripts: Create engaging audio effortlessly from scripts using a wide range of customizable voice options, styles, and accents to match your brand and content.

- Multilingual Support: Kveeky supports multiple languages, making your content accessible to a global audience and helping you reach more viewers.

- Intuitive Interface: The platform's user-friendly design simplifies script and voice selection, reducing the learning curve and accelerating the production process.

- Free Trial: Enjoy a free trial with no credit card required to experience the future of voiceovers.

Voice source separation can enhance the quality and clarity of AI voiceovers generated by tools like Kveeky. By isolating speech from background noise in the recordings used as voice samples or training data, you can improve the accuracy of voice cloning and text-to-speech (TTS) synthesis.

- Improved AI Voice Cloning: Using separated audio helps the AI model capture the nuances of the voice, resulting in a more authentic and natural-sounding clone.

- Enhanced TTS Clarity: Removing background noise from training data leads to clearer, more intelligible TTS output, which matters most when the source recordings were made in noisy environments.

- More Natural Voiceovers: Separating speech from other audio elements allows for better control over pacing, intonation, and emotional inflection, resulting in a more engaging and human-like voiceover.
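One way to check that separation actually cleaned a clip before using it as voice-cloning or TTS training material is to measure the signal-to-noise ratio, SNR = 10 · log10(signal power / noise power) in decibels. The minimal Python sketch below assumes you have a clean reference (for example, in a test where you added the noise yourself); real field recordings rarely come with one. The sample values are invented.

```python
import math

def snr_db(clean, processed):
    """SNR of `processed` against the reference `clean`, in dB.

    Everything that differs from the reference counts as noise: n = processed - clean.
    """
    signal_power = sum(c * c for c in clean)
    noise_power = sum((p - c) ** 2 for c, p in zip(clean, processed))
    if noise_power == 0:
        return math.inf  # identical to the reference
    return 10 * math.log10(signal_power / noise_power)

clean = [1.0, -1.0, 1.0, -1.0]
noisy = [1.5, -0.5, 1.5, -0.5]        # hypothetical clip before separation
separated = [1.1, -0.9, 1.1, -0.9]    # hypothetical clip after separation
improvement = snr_db(clean, separated) - snr_db(clean, noisy)
```

In this toy case the separated clip scores 20 dB against about 6 dB for the noisy one, an improvement of roughly 14 dB.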

Kveeky provides extensive customization options to fine-tune your AI voiceovers:

- Adjusting Tone, Pitch, and Speed: Fine-tune these parameters to achieve the desired vocal style that aligns perfectly with your video content.

- Adding Pauses, Emphasis, and Emotion: Incorporate natural-sounding pauses and emphasis to make the voiceover more engaging, mimicking human speech patterns.

- Creating Unique Voice Profiles: Develop distinct voice profiles for different characters or brands, adding depth and consistency to your video content.
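This guide doesn't say whether Kveeky accepts markup for these controls, so the following is a generic illustration rather than Kveeky's interface. Many TTS engines accept the W3C Speech Synthesis Markup Language (SSML), where `<prosody>` sets rate and pitch, `<break>` inserts a pause, and `<emphasis>` stresses words. The minimal Python helper `build_ssml` below (a hypothetical name) assembles such a document from script sentences.

```python
from xml.sax.saxutils import escape

# Named values defined by SSML (percentages and other forms are also allowed, omitted here)
RATES = {"x-slow", "slow", "medium", "fast", "x-fast", "default"}
PITCHES = {"x-low", "low", "medium", "high", "x-high", "default"}

def build_ssml(sentences, rate="medium", pitch="medium", pause_ms=400, emphasize=()):
    """Wrap script sentences in one prosody setting, with pauses between sentences
    and strong emphasis on the sentences whose indices appear in `emphasize`."""
    if rate not in RATES or pitch not in PITCHES:
        raise ValueError("unsupported rate or pitch value")
    parts = ["<speak>", f'<prosody rate="{rate}" pitch="{pitch}">']
    for i, sentence in enumerate(sentences):
        text = escape(sentence)  # escape &, < and > in the script text
        if i in emphasize:
            text = f'<emphasis level="strong">{text}</emphasis>'
        parts.append(text)
        if i < len(sentences) - 1:
            parts.append(f'<break time="{int(pause_ms)}ms"/>')
    parts.append("</prosody></speak>")
    return "".join(parts)

ssml = build_ssml(["Welcome back.", "Today: Q&A tips."], rate="slow", emphasize={1})
```

Strict SSML 1.1 also expects `version` and `xmlns` attributes on `<speak>`; many engines accept the bare form shown here.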

By leveraging AI-powered voice source separation and customization, you can elevate the quality of your video projects. Next, we'll look at practical applications in video production.

Practical Applications in Video Production

Voice source separation isn't just a theoretical concept; it's a practical tool that's reshaping video production workflows. Let's explore how video producers can use this technology to enhance their projects.

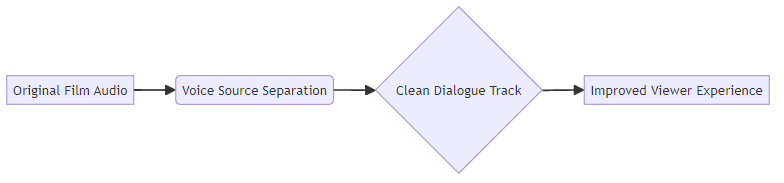

Voice source separation can significantly improve the viewing experience.

- Isolating Actors' Voices: Separating dialogue from background scores and sound effects keeps it clear and intelligible, even in scenes with complex audio layering.

- Improving Intelligibility in Noisy Scenes: This is crucial for maintaining audience engagement. Separating speech from ambient noise makes it easier to follow the story.

- Reducing the Need for ADR (Automated Dialogue Replacement): Saves time and resources. By cleaning up the original audio, video producers can avoid re-recording dialogue in a studio.
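Once dialogue and music exist as separate stems, the remix is simple arithmetic. This minimal Python sketch (function names and default values are illustrative assumptions) lowers the music by a fixed number of decibels in every frame where the dialogue stem is active, using gain = 10^(dB / 20). Production duckers smooth gain changes with attack and release times; this version switches per frame for clarity and assumes both stems have the same length.

```python
import math

def db_to_gain(db):
    """Convert a level change in decibels to a linear amplitude factor."""
    return 10 ** (db / 20)

def duck_music(dialogue, music, frame_len=160, threshold_rms=0.02, duck_db=-12.0):
    """Remix separated stems, lowering music by duck_db in frames where dialogue is active."""
    duck = db_to_gain(duck_db)
    out = []
    for start in range(0, len(dialogue), frame_len):
        d = dialogue[start:start + frame_len]
        m = music[start:start + frame_len]
        rms = math.sqrt(sum(s * s for s in d) / len(d))  # dialogue level in this frame
        g = duck if rms > threshold_rms else 1.0
        out.extend(ds + g * ms for ds, ms in zip(d, m))
    return out

# Hypothetical stems: silence then speech on the dialogue track, steady music underneath
dialogue = [0.0] * 160 + [0.5] * 160
music = [0.2] * 320
mixed = duck_music(dialogue, music)
```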

In educational content, clear audio is essential for effective learning.

- Separating Narration from Background Music and Sound Effects: Eliminates distractions. This ensures viewers focus on the key information being presented.

- Creating a Professional and Polished Audio Experience: Enhances credibility. High-quality audio makes the content more engaging and trustworthy.

- Ensuring Viewers Focus on Key Information: Improves knowledge retention. By reducing auditory clutter, viewers can better absorb and remember the material.

Voice source separation is a game-changer for spoken-word content.

- Removing Ambient Noise and Overlapping Speech: Improves audio clarity. This makes interviews and podcasts more enjoyable to listen to.

- Isolating Individual Speakers: Separating each speaker gives editors finer control and streamlines the post-production process. Editors can easily adjust levels and apply effects to each voice independently.

- Creating a More Engaging and Listenable Audio Experience: Boosts audience retention. Clean audio keeps listeners focused on the content, not the distractions.
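With each speaker on an isolated track, levelling voices becomes a per-track gain. This minimal Python sketch normalizes each track to the same RMS level in dBFS, assuming float samples with full scale at 1.0. The speaker names and target level are made up; broadcast and podcast workflows usually measure loudness in LUFS (ITU-R BS.1770) and also check for clipping, which plain RMS does not.

```python
import math

def rms_dbfs(x):
    """RMS level in dBFS for float samples where full scale is 1.0."""
    rms = math.sqrt(sum(s * s for s in x) / len(x))
    return -math.inf if rms == 0 else 20 * math.log10(rms)

def normalize_to(x, target_dbfs=-20.0):
    """Apply one gain so the track's RMS level equals target_dbfs (no clipping check)."""
    level = rms_dbfs(x)
    if level == -math.inf:
        return list(x)  # silent track: nothing to scale
    gain = 10 ** ((target_dbfs - level) / 20)
    return [s * gain for s in x]

# Hypothetical separated tracks: a loud host and a quiet guest
tracks = {"host": [0.3, -0.3] * 50, "guest": [0.01, -0.01] * 50}
levelled = {name: normalize_to(samples) for name, samples in tracks.items()}
```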

As AI-powered voice source separation continues to advance, expect even more innovative applications. Next, we'll look at the tools and technologies that make it possible.

Tools and Technologies for Voice Source Separation

Isolating individual voices from a mixed audio recording can feel like searching for a needle in a haystack. Fortunately, tools and technologies are available to help video producers separate voice sources with accuracy and efficiency.

Ready to roll up your sleeves and dive into the code? Several software libraries and frameworks empower video producers to develop custom voice source separation solutions.

- TensorFlow and PyTorch are open-source machine learning platforms. They provide the flexibility to design, train, and deploy custom models tailored to specific audio characteristics.

- Librosa is a Python library useful for audio analysis and feature extraction. It simplifies tasks like loading audio files, computing spectrograms, and extracting relevant features for voice separation.

- Open-Unmix and ConvTasNet are popular starting points for voice source separation. Open-Unmix is an open-source reference implementation for music source separation that ships with pre-trained models and is often used as a baseline for research and development. ConvTasNet (Convolutional Time-domain Audio Separation Network) is a deep learning architecture designed for audio source separation; it works directly on the waveform and is known for its effectiveness in separating speech. Pre-trained models can be fine-tuned for specific applications, saving the time and resources needed to train from scratch.
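Librosa's `librosa.stft` computes a short-time Fourier transform with an FFT (and, by default, a Hann window and centered, padded frames). To show what that spectrogram actually contains, here is a dependency-free Python sketch with a naive per-frame DFT and no padding; it is far slower than the real thing and is meant only to make the computation visible. The test signal and frame sizes are illustrative assumptions.

```python
import cmath
import math

def hann(n):
    """Periodic Hann window, the form commonly used for STFT analysis."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]

def stft_magnitude(x, n_fft=256, hop=64):
    """Magnitude spectrogram: one list of n_fft // 2 + 1 bin magnitudes per frame."""
    window = hann(n_fft)
    frames = []
    for start in range(0, len(x) - n_fft + 1, hop):
        seg = [x[start + i] * window[i] for i in range(n_fft)]
        # Non-negative frequency bins only; bin k is centered on k * sample_rate / n_fft Hz
        frames.append([abs(sum(seg[t] * cmath.exp(-2j * math.pi * k * t / n_fft)
                               for t in range(n_fft)))
                       for k in range(n_fft // 2 + 1)])
    return frames

SR = 8_000
signal = [math.sin(2 * math.pi * 1_000 * t / SR) for t in range(512)]
spec = stft_magnitude(signal, n_fft=128, hop=64)
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
```

`peak_bin` comes out as 16, because 1000 Hz / (8000 Hz / 128) = 16. Separation models typically take frames like these as input and predict a mask or a cleaned spectrogram.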

Need a quick and easy solution without the hassle of managing infrastructure? Cloud-based voice separation services offer convenient tools for video producers.

- API Access: Allows for seamless integration of voice separation capabilities into existing video editing workflows, enabling automated processing of large volumes of audio.

- Scalable Processing: Ensures efficient handling of large audio files, critical for video projects involving extensive footage. Cloud services can often process large batches faster than a single local machine by spreading the work across many servers.

- Cost-Effective Solutions: Pay-as-you-go pricing models make cloud services an attractive option for video producers who don't require continuous voice separation.
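Scalable processing usually starts by cutting a long recording into pieces that can be separated in parallel, one per worker or API request. The minimal Python sketch below (a hypothetical helper, not any service's API) produces overlapping chunk boundaries; the overlap gives the model context at each edge and leaves room to crossfade the results back together. Chunk and overlap lengths are assumptions you would tune to a service's limits.

```python
def overlapping_chunks(n_samples, chunk_len, overlap):
    """Return (start, end) sample ranges covering [0, n_samples) with the given overlap."""
    if not 0 <= overlap < chunk_len:
        raise ValueError("overlap must be >= 0 and smaller than chunk_len")
    step = chunk_len - overlap
    chunks = []
    start = 0
    while True:
        end = min(start + chunk_len, n_samples)
        chunks.append((start, end))
        if end >= n_samples:
            return chunks
        start += step

# Hypothetical job: ten minutes at 48 kHz, 30-second chunks with 1 second of overlap
SR = 48_000
ranges = overlapping_chunks(10 * 60 * SR, chunk_len=30 * SR, overlap=1 * SR)
```

For the ten-minute example this yields 21 chunks, the last one shorter than the rest.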

For video producers working with live streaming or broadcasting, real-time voice separation is essential. Hardware accelerators provide the necessary processing power.

- GPUs (Graphics Processing Units) are valuable because they can process audio data in parallel, speeding up computationally intensive tasks like deep learning-based voice separation.

- Specialized audio processing units (APUs) are designed to handle audio-specific tasks efficiently, optimizing performance for real-time audio processing.

- Optimized Performance: Crucial for live streaming and broadcasting to reduce latency and maintain audio quality, ensuring a seamless experience for viewers.
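"Real-time" has a concrete meaning that is worth computing before choosing hardware. A frame-based separator receives a new frame every hop, so each frame must be processed within that time, and it cannot emit output before it has collected a full analysis window. This minimal Python sketch shows the arithmetic; the frame sizes are illustrative assumptions, and real latency also includes buffering and any lookahead the model needs.

```python
def frame_budget_ms(hop_samples, sample_rate):
    """Time between successive frames: each frame must be processed faster than this."""
    return 1000.0 * hop_samples / sample_rate

def window_latency_ms(window_samples, sample_rate):
    """Delay from waiting for a full analysis window (ignores buffering and lookahead)."""
    return 1000.0 * window_samples / sample_rate

def real_time_factor(processing_seconds, audio_seconds):
    """Below 1.0 means the system keeps up with real time."""
    return processing_seconds / audio_seconds

# Hypothetical live setup: 16 kHz audio, 512-sample window, 160-sample hop
budget = frame_budget_ms(160, 16_000)      # 10 ms to process each frame
latency = window_latency_ms(512, 16_000)   # 32 ms before the first output
rtf = real_time_factor(3.0, 60.0)          # e.g. 3 s of compute for 60 s of audio
```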

Choosing the right tools depends on the specific needs of your video production workflow.

Now that we've explored the available tools, let's look at advanced techniques and future trends in voice source separation.

Advanced Techniques and Future Trends

Voice source separation is rapidly evolving, and the future holds exciting possibilities for video producers. Imagine a world where isolating individual voices is as simple as clicking a button.

Spatial Audio and Binaural Separation

Spatial audio and binaural separation are set to revolutionize audio production.

- HRTFs (Head-Related Transfer Functions) describe how a listener's head and ears filter sound arriving from each direction, and they are crucial for creating immersive and realistic sound experiences. Personalized HRTFs can tailor audio to an individual's unique head and ear shape, offering a personalized audio experience.

- Separating voices based on their spatial location becomes more precise. This allows video producers to create audio that accurately reflects the position of sound sources in a scene.

- The result is enhanced immersive audio experiences for VR and AR. Imagine viewers feeling as though they are truly present in the scene, hearing sounds from all directions.
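HRTFs bundle several spatial cues; one of the simplest is the interaural time difference (ITD), the tiny delay between a sound reaching one ear and the other. This minimal Python sketch estimates that delay by cross-correlating the left and right channels, then converts it to a horizontal angle with Woodworth's spherical-head approximation. The head radius and speed of sound are typical assumed values, the test signal is synthetic noise, and a full HRTF also captures level and spectral cues that this sketch ignores.

```python
import math
import random

HEAD_RADIUS_M = 0.0875   # typical adult head radius (assumption)
SPEED_OF_SOUND = 343.0   # m/s in air at about 20 degrees C

def best_lag(left, right, max_lag):
    """Lag in samples maximizing sum(left[i] * right[i + lag]); positive means right is delayed."""
    n = len(left)
    best, best_score = 0, -math.inf
    for lag in range(-max_lag, max_lag + 1):
        score = sum(left[i] * right[i + lag] for i in range(max(0, -lag), min(n, n - lag)))
        if score > best_score:
            best, best_score = lag, score
    return best

def azimuth_from_itd(itd_s):
    """Invert Woodworth's model ITD = (r / c) * (theta + sin theta) by bisection.

    Returns degrees; positive means the source is toward the left ear (left channel leads).
    """
    k = HEAD_RADIUS_M / SPEED_OF_SOUND
    target = min(abs(itd_s), k * (math.pi / 2 + 1))  # clamp to the model's 90-degree maximum
    lo, hi = 0.0, math.pi / 2
    for _ in range(60):
        mid = (lo + hi) / 2
        if k * (mid + math.sin(mid)) < target:
            lo = mid
        else:
            hi = mid
    return math.copysign(math.degrees((lo + hi) / 2), itd_s)

SR = 44_100
rng = random.Random(0)
source = [rng.gauss(0.0, 1.0) for _ in range(2_000)]  # noise burst stands in for a voice
delay = 10                                            # right ear hears it 10 samples later
left = source
right = [0.0] * delay + source[:-delay]

lag = best_lag(left, right, max_lag=30)  # 30 samples (~0.68 ms) covers a human head
angle = azimuth_from_itd(lag / SR)
```

Here the estimated lag is 10 samples (about 0.23 ms), which the model maps to a source roughly 26 degrees toward the left.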

Self-Supervised Learning and Generative Models

Self-supervised learning is changing the game by reducing reliance on labeled data.

- Models can be trained without extensive labeled datasets, significantly reducing the time and resources needed to develop accurate voice separation systems.

- Generative models, including generative adversarial networks, play a key role. These techniques allow AI to learn from unlabeled data, improving the ability to separate voices in complex audio environments. They do this by creating synthetic data or by having models compete against each other to generate more realistic outputs, effectively learning the underlying structure of audio without explicit labels.

- Systems can adapt to new audio environments and speakers more efficiently, which is particularly useful in dynamic settings where the audio characteristics change frequently.
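The "creating synthetic data" idea is easiest to see in the data-simulation step used by many separation pipelines: mix clean speech with noise at a randomly chosen signal-to-noise ratio, and every mixture comes with its own answer. Strictly, this yields labeled pairs rather than self-supervision, so treat it as an illustration of synthetic data generation only. The minimal Python sketch below assumes equal-length speech and noise clips and an illustrative 0 to 20 dB SNR range.

```python
import math
import random

def mix_at_snr(speech, noise, snr_db):
    """Scale `noise` so that speech power / noise power equals snr_db, then add it."""
    p_speech = sum(s * s for s in speech) / len(speech)
    p_noise = sum(n * n for n in noise) / len(noise)
    scale = math.sqrt(p_speech / (p_noise * 10 ** (snr_db / 10)))
    scaled_noise = [scale * n for n in noise]
    mixture = [s + n for s, n in zip(speech, scaled_noise)]
    return mixture, scaled_noise

def random_training_pair(speech, noise, rng, snr_range=(0.0, 20.0)):
    """Return (model input, training target, chosen SNR) for one synthetic example."""
    snr = rng.uniform(*snr_range)
    mixture, _ = mix_at_snr(speech, noise, snr)
    return mixture, speech, snr

# Toy clips standing in for recorded speech and background noise
speech = [1.0, -1.0] * 50
noise = [0.5, 0.5, -0.5, -0.5] * 25
rng = random.Random(7)
pairs = [random_training_pair(speech, noise, rng) for _ in range(3)]
```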

Combining Audio and Visual Cues

Combining audio and visual cues can dramatically improve separation accuracy.

- Facial recognition and lip movement analysis are becoming increasingly important. AI systems can use visual information to identify and isolate individual speakers, even in noisy environments.

- Accuracy improves most in noisy environments, where audio cues alone are least reliable. This is especially beneficial for video producers working with live recordings or outdoor footage.

- The result is more robust and reliable voice isolation systems that can handle a wide range of challenging audio conditions.

As technology continues to advance, video producers can look forward to more sophisticated and user-friendly voice source separation tools.

Conclusion: The Future of Audio Clarity

Imagine a future where audio is pristine, regardless of the recording environment. AI-powered voice source separation is rapidly bringing this closer to reality for video producers.

Improved Audio Quality and Clarity: By isolating individual voices, you can eliminate background noise and distractions. This is particularly useful in documentaries or interviews where ambient sounds can detract from the speaker's message.

Reduced Editing Time and Costs: Quickly extract the audio you need without manual adjustments. This allows editors to focus on creative aspects of the project rather than spending hours cleaning up audio.

Enhanced Accessibility and User Experience: Create separate dialogue tracks to cater to a broader audience. Clean, isolated voices also make it easier to produce accurate subtitles and transcripts, which makes content more accessible to viewers with hearing impairments.

Staying Ahead of the Curve with Innovative Tools: Adopt AI-driven solutions to improve audio quality and streamline your workflow. Video producers can use tools like Kveeky to generate lifelike voiceovers, alongside voice separation tools to remove background noise.

Leveraging AI to Create High-Quality Video Content: AI assists in producing clear, professional audio, which enhances the overall viewing experience and helps maintain audience engagement.

Exploring New Possibilities for Audio Storytelling: Experiment with spatial audio and binaural separation techniques. As mentioned earlier, Head-Related Transfer Functions (HRTFs) offer a personalized audio experience.

Continuous Advancements in AI and Machine Learning: These improvements will bring even more refined and practical applications. For example, AI systems can leverage facial recognition and lip movement analysis to isolate individual speakers in noisy environments.

The Potential for Fully Automated Audio Workflows: Imagine a future where AI handles all aspects of audio processing, from noise reduction to voice cloning, with minimal human intervention.

A Future Where Audio Clarity is the Standard, Not the Exception: AI will transform audio quality in video production. The goal is to make crystal-clear audio accessible to all creators.

The future of audio clarity is bright, and AI-powered voice source separation will play a central role. Explore advanced techniques and innovative tools to stay at the forefront of this exciting field.